Introduction

In today's fast-paced world, we have seen the emergence of "deepfakes," a portmanteau of "deep learning" and "fake" that refers to highly realistic counterfeit content crafted by artificial intelligence. The technology produces videos and images that appear genuine but are fabricated. Let's delve into its origins, its uses, and why exercising caution is imperative.

The genesis of deepfakes lies in a blend of advanced machine learning and image-synthesis capabilities. Algorithms such as generative adversarial networks (GANs) generate synthetic data and assess it repeatedly until it closely mirrors the original. It's akin to constructing an artificial realm that becomes hard to distinguish from reality.

What Exactly Is a Deepfake? Definition and Its Harmful Effect on Cybersecurity

In late 2017, Motherboard covered a video circulating on the Internet in which Gal Gadot's face had been digitally superimposed on an existing explicit video, creating the illusion that the actress was participating in the depicted actions. Although the video was fake, its quality was convincing enough that a casual viewer might easily believe it to be authentic, or simply not care whether it was.

An unidentified Reddit user, self-identified as "deepfakes," claimed responsibility for creating this manipulated video. The term "deepfakes" stems from the technology employed in producing such manipulated content, utilizing deep learning methodologies. Deep learning is a subset of machine learning techniques, themselves a part of artificial intelligence. In machine learning, models use training data to learn and improve for specific tasks. In deep learning, models autonomously discern data features, enabling better classification or analysis – operating at a more intricate level.

Deep learning isn't limited to analyzing images or videos of people but extends to diverse media forms such as audio and text. In 2020, Dave Gershgorn reported on OpenAI's release of "new" music by famous artists, generated from existing tracks. Programmers successfully created realistic tracks mimicking Elvis, Frank Sinatra, and Jay-Z's styles. This led to a legal dispute between Jay-Z's company, Roc Nation LLC, and YouTube over these AI-generated tracks.

The emergence of AI-generated text poses a substantial challenge in the realm of deepfakes. While identifying weaknesses in image, video, and audio deepfakes has been feasible, detecting manipulated text is more challenging. Replicating a user's informal texting style using deepfake technology is a plausible scenario.

All categories of deepfake media – images, videos, audio, and texts – possess the capability to imitate or modify an individual's representation. This underlies the principal threat of deepfakes, extending beyond their use to the broader concept of "Synthetic Media" and their utilization in disseminating disinformation.

What is a Generative Adversarial Network (GAN)?

One fundamental technology used to generate deepfakes and similar synthetic media is the "Generative Adversarial Network" (GAN). A GAN pits two machine-learning networks against each other to produce artificial content through a competitive process. The first network, known as the "generator," is fed data representing the desired content type so that it can learn the characteristics of that category of data.

The generator then attempts to create new instances that replicate the features of the original data. These instances are evaluated by the second machine-learning network, which has been trained, by a slightly different method, to recognize the characteristics of the same data type. This second network, referred to as the "adversary" (more commonly called the "discriminator"), tries to spot flaws in the instances it is shown, rejecting as "fakes" those that fail to exhibit the authentic traits of the original data. The rejections are fed back to the generator so that it can improve its output. This iterative process continues until the generator produces synthetic content that fools the adversary into accepting it as authentic.
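The back-and-forth between the two networks can be sketched in a few lines of Python. This is a toy illustration only, not how production deepfake tools are built: it assumes one-dimensional "data" drawn from a bell curve centred on 4, a generator that is a single linear function of random noise, and an adversary that is a single logistic unit. All function and parameter names here are hypothetical.

```python
import math
import random

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def generate(gen, z):
    """Generator: turn a noise value z into a synthetic sample a*z + b."""
    return gen["a"] * z + gen["b"]

def score(disc, x):
    """Adversary: estimated probability that sample x is real."""
    return sigmoid(disc["w"] * x + disc["c"])

def discriminator_step(disc, real, fake, lr):
    """One gradient step pushing scores of real samples toward 1
    and scores of fake samples toward 0."""
    n = len(real) + len(fake)
    grad_w = grad_c = 0.0
    for x in real:                      # loss term: -log D(x)
        d = score(disc, x)
        grad_w += (d - 1.0) * x
        grad_c += d - 1.0
    for x in fake:                      # loss term: -log(1 - D(x))
        d = score(disc, x)
        grad_w += d * x
        grad_c += d
    disc["w"] -= lr * grad_w / n
    disc["c"] -= lr * grad_c / n

def generator_step(gen, disc, noise, lr):
    """One gradient step moving generated samples toward whatever the
    adversary currently scores as real (loss term: -log D(G(z)))."""
    grad_a = grad_b = 0.0
    for z in noise:
        d = score(disc, generate(gen, z))
        common = (d - 1.0) * disc["w"]  # gradient of the loss w.r.t. the sample
        grad_a += common * z
        grad_b += common
    gen["a"] -= lr * grad_a / len(noise)
    gen["b"] -= lr * grad_b / len(noise)

def train(steps=200, batch=64, lr=0.05, seed=0):
    """Alternate adversary and generator updates on fresh batches."""
    rng = random.Random(seed)
    gen = {"a": 1.0, "b": 0.0}          # generator starts far from the real data
    disc = {"w": 0.0, "c": 0.0}         # adversary starts undecided (score 0.5)
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [generate(gen, z) for z in noise]
        discriminator_step(disc, real, fake, lr)
        generator_step(gen, disc, noise, lr)
    return gen, disc
```

Calling `train()` returns the final generator and adversary parameters. With a setup this simple the two sides tend to chase each other rather than settle neatly, which is a fair reminder that real GAN training is delicate and needs far larger networks and datasets.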

The first practical application of GANs dates back to 2014, pioneered by Ian Goodfellow and his colleagues. Their demonstration showcased the capability to fabricate synthetic images of human faces. Although GANs are frequently used for human faces, they can be applied to many other types of content. Moreover, the level of detail (i.e., realism) in the data used to train a GAN directly influences the credibility and realism of the generated output.

Examples and cases of Deepfakes and dilemmas surrounding them

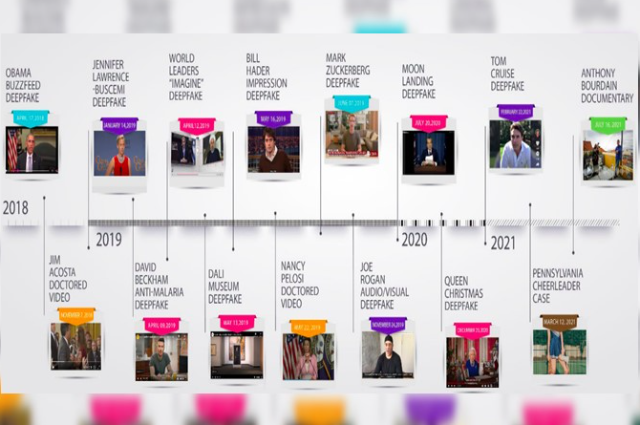

When considering a deepfake face swap, many people recall videos like the one featuring Bill Hader transforming into Tom Cruise, Arnold Schwarzenegger, Al Pacino, and Seth Rogen on David Letterman's show in 2019. This serves as an illustration of non-harmful deepfake use. However, there is a darker facet to AI/ML, stemming not from the technology itself but from its users.

Society must recognize the inherent danger of deepfakes used by malicious individuals. One notably harmful application of face swap technology is deepfake pornography. The likenesses of actors Kristen Bell and Scarlett Johansson were inserted into several pornographic videos. One of these fabricated videos, labelled as "leaked" footage, garnered over 1.5 million views. Women lack the means to prevent malevolent actors from creating deepfake pornography.

The utilization of this technology to harass or inflict harm on private individuals who lack public attention and the necessary resources to counter falsities should be alarming. The full repercussions of deepfake pornography have only begun to surface.

Another technique within deepfakes is "Lip Syncing." This method involves matching a voice recording from one context to a video recording from another, making the video's subject appear to authentically say something. Lip-syncing technology enables users to make their targeted individual say whatever they desire using recurrent neural networks (RNN). In November 2017, Stanford researchers released a paper and model for Face2Face, an RNN-based video production model allowing third parties to manipulate the speech of public figures in real-time.

Since then, deepfake technology has rapidly expanded and become more accessible to the general populace. As these methods become more available, the risk of harm to private individuals, particularly those who are politically, socially, or economically vulnerable, will inevitably rise.

Deepfakes timeline and list of famous people who faced such Cyber Threats

The most common form of DeepFakes

The prevalent methods employed in creating deepfakes are illustrated along a chronological timeline. The earliest technique, which predates deepfakes and AI/ML advancements, is known as the face swap.

During the 1990s, the advent of commercially available image editing software, like Adobe Photoshop, enabled individuals with access to computers to modify images, including the merging of one person's face or head onto another person's body.

Today, producing a convincing face swap relies on AI. The technology lets an adversary swap one person's face onto another person's face and body. The swap can be performed with an autoencoder or another Deep Neural Network (DNN). Training a face swap model with an autoencoder involves mapping pre-processed samples of person A and person B onto a shared, compressed latent space using identical encoder parameters, while a separate decoder is trained for each person.

Once the three networks (the shared encoder and the two decoders) are trained, placing person B's face onto footage of person A works as follows: the video (or image) of A is fed frame by frame into the shared encoder network and then decoded by person B's decoder network. Numerous applications facilitate face swapping, though not all use the same technology. They include FaceShifter, FaceSwap, DeepFaceLab, Reface, and TikTok. Platforms like Snapchat and TikTok demand far less computing power and skill, enabling users to generate various manipulations in real time.
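The routing at swap time (shared encoder in, the other person's decoder out) can be illustrated with a deliberately simplified Python sketch. The "networks" below are hand-written stand-ins, not trained models: each frame is assumed to be a short list whose first two numbers encode identity and whose remaining numbers encode expression or pose. Every name in it is hypothetical.

```python
from typing import Callable, List

Vector = List[float]

IDENTITY_DIMS = 2  # assumption: the first two numbers of a frame encode identity

def shared_encoder(frame: Vector) -> Vector:
    """Stand-in for the trained shared encoder: keep only the
    identity-independent part of a frame (expression, pose)."""
    return frame[IDENTITY_DIMS:]

def make_decoder(identity: Vector) -> Callable[[Vector], Vector]:
    """Stand-in for a person-specific trained decoder: render a latent
    code with one fixed identity."""
    fixed = list(identity)

    def decode(latent: Vector) -> Vector:
        return fixed + list(latent)

    return decode

def swap_faces(frames: List[Vector],
               target_decoder: Callable[[Vector], Vector]) -> List[Vector]:
    """Feed each frame through the shared encoder, then decode it
    with the target person's decoder."""
    return [target_decoder(shared_encoder(frame)) for frame in frames]

# Toy data: two frames of person A (identity [1, 0]) with different expressions.
frames_of_a = [[1.0, 0.0, 0.2, 0.7], [1.0, 0.0, 0.9, 0.1]]
decoder_a = make_decoder([1.0, 0.0])
decoder_b = make_decoder([0.0, 1.0])

swapped = swap_faces(frames_of_a, decoder_b)  # B's identity, A's expressions
```

In a real system the encoder and decoders are neural networks learned from many images, and the separation between identity and expression emerges from training rather than being fixed by hand as it is here.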

Similarities Across Scenarios

Despite the extensive range of potential attacks utilizing deepfakes, the scenarios outlined in this document follow a general progression that could be applied to engage with relevant stakeholders and advise on measures to mitigate such threats. These stages echo those delineated in a paper authored by Jon Bateman and encompass:

- Intent: Any deepfake-driven attack commences with a malicious actor selecting a target for the attack.

- Investigating the Target: At this stage, the malicious actor conducts research on the target to collect images, videos, and/or audio from diverse sources like search engines, social media platforms, video-sharing sites, podcasts, news outlets, etc.

- Crafting the Deepfake, Phase 1 - Model Training: The malicious actor leverages the gathered data to train an AI/ML model to replicate the appearance and/or voice of the target. This could involve using bespoke models or commercially available applications, depending on the actor's resources and technical expertise.

- Crafting the Deepfake, Phase 2 – Generating the Content: The malicious actor produces a deepfake portraying the target engaging in actions or uttering statements they never actually did. This could be achieved using the actor's hardware, commercial cloud services, or third-party applications.

- Distributing the Deepfake: The malicious actor disseminates the deepfake, whether through targeted delivery to an individual or more broadly to a wide audience via methods like email transmission or posting on social media.

- Viewer(s) Response: Viewers react and respond to the deepfake's content.

- Victim(s) Response: The target of the deepfake reacts and responds, often engaging in "damage control." While the victim is also a "viewer," their response may differ significantly due to their unique role in the attack.

Options for mitigating these actions exist at each of these stages.

What kind of challenges are faced in Deepfakes?

- Data requirements for detection: Tools designed to detect deepfakes typically necessitate extensive and diverse datasets to reliably identify manipulated content. Despite efforts by technology firms and researchers to offer datasets for training detection tools, the current sets available alone do not suffice. To maintain effectiveness, detection tools need continual updates incorporating increasingly sophisticated data.

- Lack of automated detection: Existing tools cannot conduct comprehensive and automated analyses that consistently spot deepfakes. Ongoing research endeavors aim to develop automated systems capable of not only identifying deepfakes but also providing insights into their creation process and evaluating the overall authenticity of digital content.

- Adapting to detection methods: Efforts to pinpoint deepfakes often lead to the emergence of more intricate techniques for creating them. This constant evolution necessitates regular updates to detection tools to remain effective in this ongoing "cat and mouse" scenario.

- Limitations of detection: Even if detection technology were flawless, it might not deter the effectiveness of fake videos disseminated as disinformation. This is because many viewers may lack awareness of deepfakes or may not invest the effort to verify the authenticity of the videos they encounter.

- Varied social media moderation standards: Major social media platforms maintain differing guidelines for moderating deepfakes, resulting in inconsistency across these platforms.

- Legal complexities: Proposed laws or regulations addressing deepfake media could potentially raise concerns about individuals' freedom of speech and expression, as well as the privacy rights of those misrepresented in deepfakes. Additionally, enforcing federal legislation aimed at combating deepfakes could present significant challenges.

To what extent has it progressed and is it easy for everyone to make DeepFakes?

Crafting a deepfake is within reach for individuals possessing basic computer proficiency and access to a personal computer. Programs available online, accompanied by instructional guides on generating deepfake videos, are readily accessible. Nevertheless, to fashion a reasonably convincing deepfake, these applications generally demand a substantial volume of facial images, ranging from hundreds to thousands, of the faces intended for swapping or manipulation. This is why celebrities and government officials, whose images are abundant, are the primary targets for such manipulations. Crafting more persuasive deepfakes with Generative Adversarial Networks (GANs) requires greater technical expertise and resources. As artificial neural network technologies have advanced swiftly in tandem with more potent and abundant computing capabilities, the capacity to fabricate convincing deepfakes has also progressed significantly.

Functions of Deepfakes: the disturbing character and impact on other areas

- Film and Series: Ever witnessed a movie featuring an actor from the past? Deepfake technology facilitates these scenarios, crafting scenes with actors absent in reality.

- Political Deception: One unpleasant use of deepfakes involves creating false recordings of politicians engaging in actions or uttering words they never did, distorting facts and clouding truth in politics.

- Concerns in Cybersecurity: Malicious actors leverage deepfakes to impersonate others, deceiving individuals into revealing critical information. This can result in financial theft, identity misrepresentation, or other online criminal activities.

- Deceptive Communications: Imagine receiving a message purportedly from a friend, except it's not them - it's a deepfake! This technology can mislead us into believing falsehoods.

- Disturbing Videos: Deepfake technology harbors an unsavory aspect, generating fabricated videos blending real individuals' faces with inappropriate content. This behavior is not only unkind but also detrimental to individuals' emotions and reputations.

Why Is Caution Necessary?

- Unauthorized Use of Identities: Deepfakes can use someone's face without their consent. This is akin to someone snapping a photo of you without permission, but more intrusive, because the result can show you performing actions or making statements you never did.

- Erosion of Trust: If widespread, deepfakes might blur the line between truth and falsity online, eroding trust in what we perceive or hear, thereby undermining credibility.

- Legal Ambiguity: Regulations concerning Deepfakes remain ambiguous, making it challenging to assign responsibility in adverse situations. This underscores the need for improved laws to prevent malicious use of this technology.

- Journalism's Dilemma: Deepfakes have the potential to disrupt journalism by disseminating false news and casting doubt on the credibility of information presented on television or the internet.

Deepfakes leading to violation of Right To Privacy?

Widespread use of deepfake videos is poised to encroach upon the privacy of the individuals depicted in them. The Supreme Court of India, in Justice K.S. Puttaswamy (Retd.) v. Union of India, acknowledged the Right to Privacy as a fundamental right. A nine-judge bench emphasized that privacy safeguards an individual's autonomy, empowering them to regulate various facets of their lives. The court underscored privacy as an element of human dignity, integral to a life lived with genuine freedom. Furthermore, the apex court stressed the centrality of privacy in a democratic state.

Addressing individual autonomy, the Court asserted that privacy primarily concerns individuals, allowing interference only when justified and equitable, not arbitrary or oppressive. The Court extended protection to both the physical and mental aspects of an individual's privacy. The Right to Privacy also acknowledges safeguarding one's life from public scrutiny, aligning with the constitutional perception of a human being capable of exerting control over their life aspects. Emphasizing individual human value and personal space contributes to safeguarding individual autonomy.

The Court emphasized that an implicit aspect of the Right to Privacy includes the choice of information released into the public domain, with individuals serving as primary decision-makers. Consequently, it's evident that deepfake videos substantially violate individuals' Right to Privacy across various dimensions. Despite the absence of explicit legislation prohibiting deepfakes, legal claims against their dissemination would likely prevail in a court of law.

Updated Data Bill's silence regarding generative AI tools is concerning

The exclusion of artificial intelligence and the absence of any provisions to govern emerging technology and tools such as generative AI in the recent iteration of the Digital Personal Data Protection (DPDP) Bill has raised concerns among experts. They fear this omission might create a gap in the privacy law, leading to confusion and uncertainty regarding the regulation of continuously evolving technologies.

Experts highlighted the increasing prevalence of deepfakes generated by AI tools and emphasized the necessity for the DPDP Bill to address this issue. With elections upcoming in major democracies like India and the US, the circulation of manipulated images or altered audio and videos to damage the reputation of public figures becomes a significant concern.

Many experts noted that existing privacy-centered legislation in various jurisdictions is proving inadequate in tackling challenges posed by generative AI. For instance, they pointed out that while the General Data Protection Regulation (GDPR), adopted in 2016, aimed to provide transparency and clarity, the EU is currently developing a separate regulatory framework specifically for AI.

Jaspreet Bindra, Founder and MD of Tech Whisperer, warned about the potential impact of AI-generated content on voters' decisions during the upcoming elections in India and the US, noting that many voters might not recognize such content as AI-generated, potentially influencing their choices.

Moreover, while it might be easier to monitor and regulate larger intermediaries such as major social networks and significant Gen AI players, the open-source nature of generative AI means that even a small, obscure company or individual developer could create and distribute objectionable content on a large scale and at a rapid pace, posing challenges for effective tracking, management, and regulation.

Industry data suggests significant growth in the AI market in India, with a projected Compound Annual Growth Rate (CAGR) of 20.2%. The sector is expected to grow from $3.1 billion in 2020 to $7.8 billion in 2025. The number of AI startups in India has surged fourteenfold since 2000, accompanied by a sixfold rise in investments in these startups.
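As a quick sanity check, the quoted growth rate and the two market-size figures can be tested against each other with the standard compound-growth formula. The short Python sketch below assumes a five-year span (2020 to 2025) and uses hypothetical function names.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once a year for `years` years."""
    return start_value * (1.0 + rate) ** years

implied_rate = cagr(3.1, 7.8, 5)        # rate implied by $3.1B -> $7.8B
projected_2025 = project(3.1, 0.202, 5)  # $3.1B compounded at 20.2% for 5 years

print(f"Implied CAGR: {implied_rate:.1%}")
print(f"Projected 2025 market: ${projected_2025:.2f} billion")
```

The implied rate comes out very close to the quoted 20.2%, so the three figures are consistent with one another, allowing for rounding.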

Identifying a Deepfake: Techniques to Recognize Them

Detecting deepfakes remains challenging as technology advances. Here are tactics to identify them:

- Scrutinize facial and vocal cues: Look for inconsistencies in facial expressions, eye movements, and lip synchronization. Notice any odd facial features or artificial speech patterns.

- Inspect backgrounds: Spot anomalies in lighting or unusual elements in the background.

- Analyze lighting and shadows: Notice any irregularities in lighting on the subject's face or discrepancies in shadows.

- Consider the context: Evaluate if the content seems remarkably sensational, contentious, or unexpected.

- Employ search tools: Utilize reverse image and audio searches to verify if the content has been reused.

- Seek expertise and tools: Consult professionals and utilize specialized software designed for deepfake detection.

- Maintain a skeptical approach: Approach doubtful online content with a cautious mindset.

Remember, although these techniques assist, there's no foolproof method for identifying all deepfakes.
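To give a feel for how a reverse image search might recognize reused content, here is a minimal Python sketch of one common idea, an "average hash": the image is reduced to a grid of brightness values, each cell is marked as brighter or darker than the average, and two images are compared by counting differing cells. This is an assumption about one possible approach, not a description of how any particular search engine works, and the tiny 4x4 grid and function names are purely illustrative.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0 (black) to 255 (white)

def average_hash(pixels: Image) -> List[int]:
    """Mark each pixel 1 if it is brighter than the image's mean, else 0."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]

def hamming_distance(hash_a: List[int], hash_b: List[int]) -> int:
    """Number of positions where two hashes differ (0 = same fingerprint)."""
    return sum(a != b for a, b in zip(hash_a, hash_b))

# A toy 4x4 "image", a slightly brightened copy, and an inverted copy.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 215],
    [20, 190, 40, 225],
]
brightened = [[p + 10 for p in row] for row in original]
inverted = [[255 - p for p in row] for row in original]

same_source = hamming_distance(average_hash(original), average_hash(brightened))
different = hamming_distance(average_hash(original), average_hash(inverted))
```

A small distance suggests the two images share a source even after light editing, while a large distance suggests unrelated content. Real tools first shrink full-size images to a small grid and use more robust fingerprints, but the comparison step follows the same pattern.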

Strategies to Counteract

- Technological Solutions - Utilizing Blockchain to Combat Deepfakes: Axon Enterprise Inc, the leading manufacturer of police body cameras in the US, has strengthened the security of its devices in ways that could help debunk deepfake videos. Body-camera footage has become vital evidence in cases of alleged police misconduct, and defense attorneys have raised doubts about video integrity, citing apparent alterations that shorten scenes or modify timestamps. Axon's Body 3 camera introduces added safeguards: by default, captured footage cannot be played back, downloaded, or modified unless the user is authenticated, for example with a password.

- Accountability and Responsibility of Social Media Platforms: Photographs and images, being capable of identifying individuals, qualify as sensitive personal data under the Digital Personal Data Protection Act, 2023. Deepfakes therefore constitute a breach of personal data and a violation of an individual's right to privacy. While publicly available data might not fall under the law, social media giants will still need to take responsibility if the information on their platforms is used to create misinformation. Moreover, such misinformation is often disseminated through social media channels, which makes controls necessary to prevent it. YouTube recently announced measures requiring creators to disclose whether content is produced using AI tools. The imperative is to establish a standardized approach that all platforms can adopt, applicable across borders.

The Union Minister of Electronics and Information Technology has declared that the government is set to introduce a framework addressing the misuse of AI and deepfake technology on November 24, 2023.
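The idea behind tamper-evident footage, raised in the technological solutions above, can be sketched with Python's standard library. The example assumes a recording device that holds a secret key and attaches a keyed fingerprint (an HMAC) to each recording, so that any later change to the bytes is detectable. This is a generic illustration and not a description of Axon's actual mechanism; the key, the sample bytes, and the function names are all hypothetical.

```python
import hashlib
import hmac

def sign_footage(footage: bytes, device_key: bytes) -> str:
    """Compute a keyed SHA-256 fingerprint of the recording."""
    return hmac.new(device_key, footage, hashlib.sha256).hexdigest()

def verify_footage(footage: bytes, device_key: bytes, tag: str) -> bool:
    """Return True only if the footage still matches its original fingerprint."""
    expected = sign_footage(footage, device_key)
    return hmac.compare_digest(expected, tag)

device_key = b"example-secret-key"           # hypothetical key stored on the device
recording = b"frame1|frame2|frame3"          # stand-in for raw video bytes
tag = sign_footage(recording, device_key)    # stored alongside the recording

untouched_ok = verify_footage(recording, device_key, tag)
edited_ok = verify_footage(b"frame1|frame3", device_key, tag)  # a scene was cut
```

Here `untouched_ok` is true and `edited_ok` is false, because removing a scene changes the bytes and therefore the fingerprint. A check like this can show that a file was altered after capture, though it cannot by itself say what was changed or by whom.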

Conclusion

In the end, I would like to share an anonymous quote that puts it well: “The age of deepfake whether you participate in it or not, it's coming faster than you think”.

As the sophistication of deepfake technology progresses, people need to remain alert and knowledgeable about the potential dangers it carries. Through comprehending the process of generating deepfakes, acknowledging the challenges they bring, and acquiring the skills to detect them, we can collaboratively strive to minimize their influence on society. Keeping oneself informed and practicing responsible consumption of media will play a pivotal role in combating this evolving menace.

The Indian Government must address the potential emergence of deepfakes at its early stages. When questioned about deepfake technology, the IT minister of India acknowledged the government's awareness but indicated that the impact of deepfake technology might only be confined to generating fake news. However, the reality differs, as deepfakes can be created for entertainment purposes yet still violate an individual's privacy. Hence, this matter requires significant attention before it escalates into an uncontrollable widespread problem.
