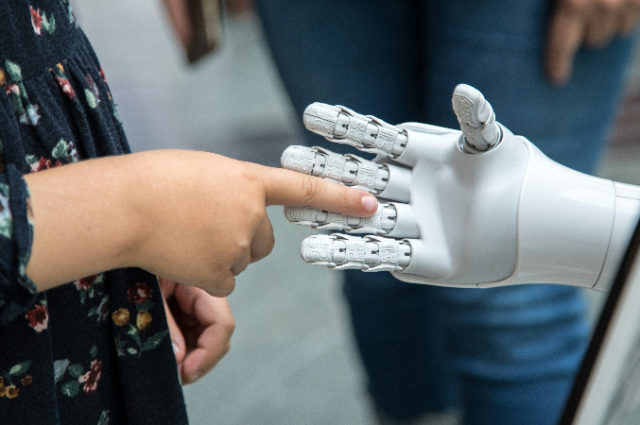

Photo by Katja Anokhina on Unsplash

The Premise: The Crisis of the Digital-Humanity Gap

We stand at a profound inflection point in civilization. For decades, the mantra of the digital age was "move fast and break things," driven by an unwavering belief in technology's inherent goodness and its power to optimize every corner of human existence. The result is the ubiquitous, invisible infrastructure of algorithms, data streams, and automated systems that govern our attention, our choices, and our societies.

Yet, this triumph of optimization has created a deep and widening fissure: The Digital-Humanity Gap.

This gap is the chasm between the staggering efficiency of technology and the messy, slow, complex, and sometimes irrational needs of the human soul. It is the distance between the algorithmic imperative—which seeks engagement, prediction, and profit—and the human imperative—which demands dignity, autonomy, empathy, and meaning.

To close this gap and steer our future, we must stop asking “What can technology do?” and start asking the only question that matters: “What does it mean to be human in a world remade by code?”

This essay is a call to recognize that the greatest innovation of the next century will not be a new algorithm, but the design of a digital world that places the flourishing of Homo sapiens—our cognitive, emotional, and social well-being—as its primary, non-negotiable telos (purpose).

The Cost of Convenience: The Algorithmic Colonization of the Self

The most dangerous transformations in the digital age are not catastrophic; they are incremental and seductive. They arrive wrapped in the packaging of convenience, efficiency, and personalization, making them difficult to critique without sounding like a Luddite railing against progress. The reality, however, is that the optimization of our lives has led to a systematic colonization of the self.

The Erosion of Autonomy and Cognitive Bandwidth

Algorithms, particularly those governing social media and personalized feeds, are primarily designed for engagement, not enlightenment. They succeed by exploiting core psychological vulnerabilities: the need for validation, the fear of missing out (FOMO), and the human tendency toward tribalism.

Psychological research points to a tangible toll:

- Decision Fatigue and Cognitive Load: By filtering the world into easily digestible, highly personalized feeds, algorithms reduce the cognitive friction necessary for critical analysis. While this increases efficiency, it also contributes to what many experts call "cognitive overload." Individuals, constantly bombarded by optimized information, suffer from decision fatigue, struggling to maintain sustained concentration and often preferring instant gratification over deep, meaningful work.

- The Polarization Trap: The optimization for engagement inevitably prioritizes content that is emotionally charged: often divisive, fear-inducing, or sensational. This creates echo chambers that reinforce existing beliefs (attitudinal polarization), reducing empathy and social cohesion across different groups. Such insular information environments have also been linked to higher levels of loneliness, despite the perceived connection.

- The Loss of the Messy Draft: Advanced Generative AI is the latest, most powerful tool in this colonization. By offering instant "first drafts," "summaries," and "brainstorming ideas," it bypasses the necessary struggle—the messy draft—that characterizes true intellectual creation. The value of human thought lies not just in the output, but in the internal process of synthesis, contradiction, and frustration that forges genuinely novel ideas. When we rely on AI to structure our initial thoughts, we risk becoming perpetual editors of the probable, rather than architects of the improbable. We prioritize retrieval over inquiry.

This convenience comes at the cost of our most human capacities: the ability to sustain deep attention, to encounter friction that sparks new thought, and to build the complex mental map of the world without a filter.

The Problem of Data Dignity

The foundation of the entire digital infrastructure is the monetization of our existence. Our clicks, our locations, our pauses, and our consumption patterns are aggregated, processed, and weaponized into predictive models. The concept of data dignity—the idea that our personal information has an inherent value that should not be simply extracted and exploited—is the central ethical crisis of our time.

In the digital age, we have reversed the fundamental ethical principle laid out by Immanuel Kant: treating people as an end in themselves, never merely as a means. By reducing the human user to a data point (a means) for profit and engagement (the company's end), the current digital economy fundamentally violates human dignity. The drive for optimization has consistently prioritized corporate efficiency over user autonomy.

The Philosophical Framework: From Efficiency to Flourishing

To establish a "Humanity First" doctrine, we must anchor our efforts in established ethical and philosophical traditions. This is not a technical problem to be solved by code, but a moral problem to be solved by principled design.

Kant and the Unconditional Value of the Human

Kant’s critical philosophy provides a powerful lens. Kant argued that humans are rational subjects, characterized by the capacity for reflection and autonomy. True freedom, in the Kantian sense, is not doing whatever you want, but having the capacity to set and follow moral rules grounded in reason.

In the digital context, this translates into three non-negotiables:

- Dignity of the Rational Subject: Technology must never erode our capacity for rational thought or moral choice. Algorithmic nudges that manipulate behavior without our transparent knowledge violate our autonomy.

- Meaningful Human Control: As proposed in policy discussions, ultimate responsibility and oversight for consequential decisions (e.g., in justice, finance, or medicine) must remain with a human being. Machines are tools, not moral agents. We must reject the notion of giving machines an "electronic personality or identity" if it confuses the line of accountability.

- The Categorical Imperative of Design: We must ask: Could the principle behind this technology (e.g., constant surveillance for optimization) be universalized without fundamentally harming humanity? If a product requires the constant degradation of attention or the promotion of tribalism to succeed, then it is morally defective.

Augmentation, Not Replacement: The Augmentation Paradigm

The pursuit of "Humanity First" is best encapsulated by the Augmentation Paradigm. This philosophy asserts that the purpose of technology is not to replace human intellect or labor, but to complement and enhance our unique human abilities.

As one policy paper suggested, intelligent technologies should be seen as a support to human decision-making, not a replacement. This requires a profound cultural shift among tech designers, moving away from the Silicon Valley obsession with full automation and disruption, and toward the principles of co-creation and service.

Example: The Surgeon and the Robot: A robot arm may perform a more precise cut, but the surgeon retains the holistic view, the judgment, the empathy for the patient, and the ultimate responsibility. The robot augments the surgeon’s hand; it does not replace the surgeon's mind. This model must be applied to the digital sphere, ensuring that all AI tools serve to amplify our uniquely human traits—creativity, critical thought, and compassion—rather than sideline them.

The New Social Contract: The Path to a Human-Centric Digital World

To move from principle to action, we require a new social contract that applies human rights frameworks directly to the digital ecosystem. This requires simultaneous action across three fronts: Regulation, Design, and Education.

The Regulatory Imperative (Policy)

Governments and international bodies must establish non-negotiable legal frameworks that enforce the "Humanity First" principle. Key policy recommendations include:

- Enshrining the Right to Cognitive Privacy: This goes beyond data privacy (like GDPR). It is the right to have one's mental space—the environment of thought and attention—protected from deliberate, psychological manipulation by profit-driven algorithms. It means restricting opaque systems that prey on addiction and emotional vulnerability.

- Mandatory Algorithmic Impact Assessments (AIA): Before deploying any AI system that impacts high-stakes areas (justice, employment, finance), companies must conduct a public, third-party audit to assess its potential for bias, social harm, and psychological damage. As UNESCO recommends, responsibility and accountability require that AI systems be auditable and traceable.

- Data Fiduciary Duty: Legislative bodies should establish that companies that hold personal data are not merely custodians, but fiduciaries, legally bound to act in the best interests of the individual, not the shareholder. This dramatically shifts the incentives away from perpetual extraction.

- Implementing "Digital Sustainability": As recommended in policy discourse, AI initiatives must be measured against their impact on long-term sustainability and the well-being of future generations. This must include measuring the environmental cost of massive AI training and deployment.

The Design Shift (Industry)

The creators of the digital world must adopt new ethical frameworks that prioritize human well-being over metrics like "daily active users" or "time spent on platform."

Human-Centric Design Principles: Designers must shift from Persuasive Design (designed to change behavior) to Supportive Design (designed to support a pre-defined human goal). This includes:

- Defaulting to Slowness: Designing in "friction" or "speed bumps" for high-stakes decisions (e.g., requiring a 5-second pause before posting an angry comment).

- Transparency by Design: Mandating that the "Why" behind an algorithmic recommendation be easily accessible and understandable.

- Interoperability: Requiring platforms to allow users to easily move their social graphs and data between services, thus breaking the current lock-in model that forces constant engagement.

Prioritizing Cognitive Health: Industry must commit to the Proportionality Principle: the use of AI must not go beyond what is necessary to achieve a legitimate aim. If the goal is a product sale, the means of manipulation should be strictly proportional, not addictive. Companies must actively design for a reduction in anxiety, depression, and loneliness, rather than passively fueling these states through optimization.

The Educational Foundation (Individual)

Ultimately, digital citizenship is the responsibility of the individual. We must cultivate Digital Literacy and Awareness—not just knowing how to use the tools, but knowing how the tools are using us.

- The New Liberal Arts: Digital ethics, media literacy, and AI consciousness must be integrated into all levels of education. Every student—from arts to engineering—must wrestle with the ethical antinomies of technology.

- Practicing Cognitive Minimalism: Individuals must intentionally carve out "No-Prompt Zones" and "Digital Silences" in their daily lives. This involves actively seeking out unoptimized information, confronting challenging viewpoints, and committing to the "messy draft" in their own intellectual pursuits. It is a commitment to fostering the human capacity for deep work, the ability to focus without distraction, which no machine can replicate.

The Stewardship of the Future

The challenge of "Humanity First in the Digital Age" is nothing less than the moral stewardship of our collective future. We are not just building tools; we are building environments that shape our consciousness, our culture, and our capacity for moral action.

The current trajectory, driven by the seductive but dehumanizing force of unbridled optimization, is unsustainable. It leads toward a society that is highly efficient but profoundly empty; one that has all the answers but has forgotten how to ask a truly original question. To secure a higher position in the digital future—a position of moral and intellectual leadership—humanity must demand of itself and its creations a new contract. This contract must state clearly: Technology must serve the human good, not define it.

Our dignity, autonomy, capacity for empathy, and ability to reflect are not mere inputs to be optimized, but the sacred outputs that must be protected. The time for passive consumption is over. The time for conscious, ethical, human-centric design—the architecture of a digital future where the human soul can still flourish—is now. The silence must be reclaimed.

"We must not let the digital age simply ask 'What works?' We must first ask: 'What makes us whole?'"