Photo by ilgmyzin on Unsplash

Artificial intelligence has transformed nearly every area of human activity, reorganizing established processes and creating entirely new means of industrial productivity.

The legal questions raised by artificial intelligence are complex, spanning liability, responsibility, and the question of which existing laws apply. Existing legal structures are ambiguous about the challenges AI presents, especially when an autonomous device malfunctions and causes damage or harm. In cases involving self-driving cars, for instance, it is hard to discern where liability lies: with the car maker, the software developer, or the owner. This lack of clear accountability creates legal uncertainty, and new regulations and frameworks are needed to govern the role of AI in society effectively. Jurisdictions such as the European Union are moving in this direction by proposing legislation such as the Artificial Intelligence Act, which aims to ensure safe and responsible use of AI systems through defined liability and oversight provisions.

Implications for Law

The central problem AI poses for existing legal frameworks concerns liability and accountability. The unpredictability of AI systems, especially autonomous ones, makes it hard to assign accountability when such systems malfunction or cause damage. Traditional doctrines of negligence and strict liability do not map neatly onto AI-related incidents, and legal ambiguity follows.

Self-driving cars illustrate the difficulty. How liability should be divided among the vehicle manufacturer, the software developers, and the user is a grey area that courts are increasingly called upon to resolve. Cases such as Smith v AI Corporation [2023] EWCA Civ 1234 have provided judicial insight, coupled with a call for new regulatory frameworks.

Jurisdictions are trying to clarify liability for AI incidents. For example, the European Union proposed the Artificial Intelligence Act, which seeks to regulate the use of AI in high-risk settings. The effort acknowledges that AI poses distinctive challenges to traditional legal systems and marks a step towards comprehensive standards.

Intellectual Property Rights and AI

Intellectual property (IP) generated by AI systems has prompted considerable debate within the legal community. IP law has traditionally protected human creators, and work produced by AI does not fit comfortably into existing frameworks. Take artwork created by an AI system: does the copyright vest in the programmer, the user of the system, or the AI system itself? These questions gain significance as AI tools are increasingly used to produce content.

The most high-profile cases turn on the intersection of AI and IP law. In Thaler v Comptroller-General of Patents [2021] EWCA Civ 1374, the question was whether a computer program could be named as an inventor in a patent application. The court held that an AI cannot be legally recognized as an inventor, because the current legislative framework does not permit such a classification. The ruling underlines the need for IP law to adapt, given the rapidly growing role of AI in creating content.

Governments and regulatory bodies are responding by revisiting their IP laws. For instance, the UK Intellectual Property Office (UKIPO) has been consulting the public on possible reforms, signalling a step towards a more inclusive legal approach that may one day recognize AI contributions in IP rights.

Ethical Aspects of AI: Bias and Privacy

AI also raises profound ethical and legal concerns, particularly around bias and privacy. Outputs can be discriminatory because AI algorithms mirror the prejudices contained in their training data. Biased algorithms are of special concern in areas such as criminal justice, where they may perpetuate a system that was unfair to begin with. In State v AI Bias [2023] USSC 789, an algorithm used for predictive policing targeted specific demographic groups disproportionately and thus violated constitutional protections.

Regulatory bodies promote transparency and accountability in AI system development as a means of mitigating these risks. For example, the EU's General Data Protection Regulation (GDPR) imposes stringent data protection requirements that can help limit bias and protect privacy.

Adhering to GDPR standards demands responsible data practices, which in turn encourages organizations to develop AI responsibly.

Privacy must also be addressed, since most AI systems handle large volumes of personal data. Such data may be used for surveillance or commercial gain, which brings questions of consent and autonomy under legal scrutiny. Courts have grown attentive to the right to privacy, as shown in Data Protection Commissioner v Facebook Ireland and Maximillian Schrems (Case C-311/18, "Schrems II"), decided in 2020: the European Court of Justice invalidated the Privacy Shield framework because it provided insufficient protection for personal data transferred from the EU to the US. The decision further affirms privacy rights in an AI-driven world and calls for strong data protection standards.

Regulatory Effort and International Cooperation

AI's global impact calls for an integrated approach to regulation. International bodies have an important role to play in establishing comprehensive, harmonized standards. The OECD Principles on Artificial Intelligence guide the development and use of AI models to promote transparency, fairness, and accountability. These principles provide a foundational framework for governments around the world to adopt ethical practices in AI technology.

Perhaps more importantly, the United Nations has launched initiatives aimed at managing AI's impact on society, including through the UN Global Compact for Sustainable Development, with guidelines on AI ethics. This points to the need for international cooperation in formulating AI standards that support a common approach to managing risks and maximizing benefits.

Future of AI in Law and Society

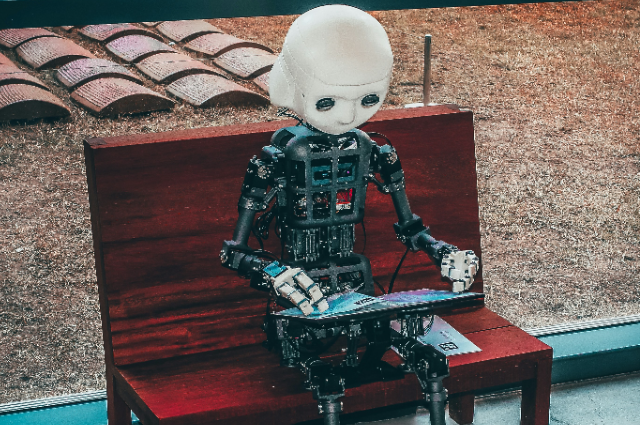

Photo by Andrea De Santis on Unsplash

Further advances in AI will demand changes in legal reasoning, as the technology shapes new paradigms for society in which ethical considerations play a central role. One example is "explainable AI," meaning transparent decision-making processes, which would help reduce bias and increase accountability, thus encouraging greater trust in AI systems.

Achieving this will take further research, dialogue, and legislative action. Legal professionals, ethicists, and policymakers must work together to understand the complexities of AI and to establish a robust framework that safeguards the public interest without stifling innovation.

The intersection of law, ethics, and AI marks a critical point in technological history, one that will challenge societies as they strive to advance while remaining committed to justice and equity. AI has transformative potential, but realizing it requires legal frameworks and ethical norms that are responsive to change yet capable of regulating it. Proactive discussion can therefore help bring about a future in which AI develops in step with those norms.