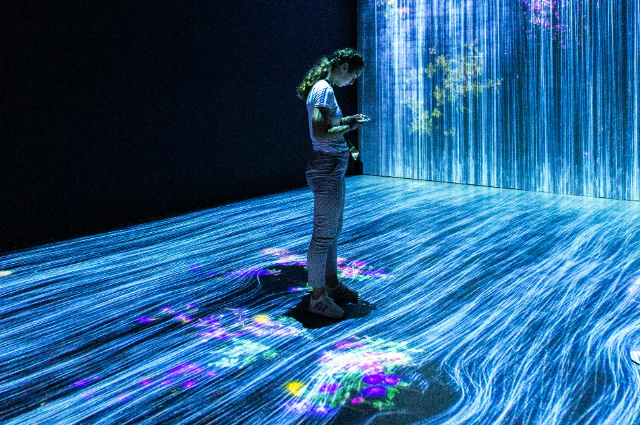

Photo by Mahdis Mousavi on Unsplash

Artificial intelligence (AI) has advanced rapidly in recent years. AI systems can now recognize faces, process natural language, and even mimic human emotional expression. One area of active progress is empathy and emotional quotient (EQ). Compared with their human counterparts, however, AI empathy and EQ differ in important ways. In this post, we look at what AI empathy and EQ are, how they measure up against human empathy and EQ, and whether the gap between machines and emotions can be bridged.

Understanding AI Empathy

AI empathy refers to the capacity of machines to recognize and respond to human emotions. It is made possible by algorithms, machine learning, and data analysis: models are trained on large amounts of data to recognize patterns of behavior and language that correlate with specific human emotions. For example, a chatbot with emotion recognition can identify anger, sadness, or happiness in a user's text responses and adjust its tone accordingly.
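The idea of detecting an emotion in text and adjusting tone can be illustrated with a deliberately simple sketch. It is not how production chatbots work: real systems learn these associations from labeled data, whereas this example uses a small hand-written keyword list. The names `EMOTION_KEYWORDS`, `TONE_PREFIX`, `detect_emotion`, and `reply` are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical toy lexicon (an assumption for this sketch).
# A trained model would learn such associations from labeled data.
EMOTION_KEYWORDS = {
    "anger": {"angry", "furious", "annoyed", "unacceptable", "hate"},
    "sadness": {"sad", "lonely", "upset", "miserable", "crying"},
    "happiness": {"happy", "great", "thanks", "love", "wonderful"},
}

# Hypothetical reply openers, one per detected emotion.
TONE_PREFIX = {
    "anger": "I'm sorry about the trouble.",
    "sadness": "That sounds hard.",
    "happiness": "Glad to hear it!",
    "neutral": "Thanks for your message.",
}


def detect_emotion(text: str) -> str:
    """Return the emotion with the most keyword hits, or 'neutral' if none match."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = {
        emotion: sum(1 for word in words if word in keywords)
        for emotion, keywords in EMOTION_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)  # on a tie, the first listed emotion wins
    return best if scores[best] > 0 else "neutral"


def reply(text: str) -> str:
    """Open the reply with a tone that matches the detected emotion."""
    return f"{TONE_PREFIX[detect_emotion(text)]} How can I help?"


if __name__ == "__main__":
    print(reply("I am furious, this is unacceptable"))
    print(reply("Where is my order?"))
```

A keyword lookup like this misses sarcasm, negation ("not happy"), and context, which is part of why practical systems rely on trained models instead.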

AI and Emotional Quotient

Emotional quotient, or EQ, is a measure of emotional intelligence: the ability to recognize, understand, and manage our own emotions, as well as to empathize with others. Human EQ has long been recognized as a crucial skill for effective communication and social interaction, and AI research has begun to explore this area as well. AI systems can estimate emotions by analyzing facial expressions, tone of voice, and even physiological signals. They can then generate appropriate responses and sustain longer conversations, mimicking human empathy to a certain extent.

The Limitations of AI Empathy and EQ

Although AI empathy and EQ have made significant progress, they still have inherent limitations. Machines cannot truly experience emotions; they can only simulate them and respond based on learned patterns. While AI can predict emotions from its inputs, it has no personal experiences or emotional depth to draw upon. Moreover, AI models lack true understanding and perspective, which limits their ability to provide genuine empathy. The nuances of human emotions, such as cultural context and subjective experience, pose challenges that AI has yet to overcome.

Human Empathy and EQ

Human empathy and EQ are deeply rooted in our own lived experiences, our emotions, and our ability to understand others' perspectives. Unlike machines, humans possess innate emotional intelligence that enables them to understand and connect with others on an emotional level, fostering genuine empathy. Our ability to interpret subtle cues and body language, together with our intuition and personal experiences, contributes to the empathy we show towards others.

Bridging the Gap between Machines and Emotions

While AI may not be able to match human empathy and EQ entirely, there are ways in which both can complement each other. AI can aid in mental health support, providing automated assistance and resources. It can assist humans in recognizing and managing their own emotions, facilitating personal growth. Additionally, AI-driven virtual agents and chatbots can provide support in various industries, including customer service, where quick and accurate responses are required.

However, it is crucial to remember that AI should never replace human empathy and EQ entirely. Real human connections are invaluable, and genuine empathy involves human vulnerability, shared experiences, and understanding that machines cannot replicate.

AI empathy and EQ have made significant progress. However, it is important to recognize the limitations of AI and the irreplaceability of human empathy and EQ. While machines can simulate emotions and provide valuable support, true connection and understanding remain within the realm of human interactions. As the field of AI advances, finding the right balance between technology and human emotions is essential to a future where both can coexist and thrive.

Research into AI emotional quotient (EQ) and self-awareness continues to advance. While these systems do not match the deep emotional intelligence found in humans, they show the potential of AI to recognize and respond to emotions. Here are a few notable examples:

1. DeepFace:

Developed by Facebook AI Research (FAIR), DeepFace is a deep-learning facial recognition system. It was built to verify a person's identity from a photo rather than to read emotions, but the same kind of deep neural network is used in facial expression recognition, where models analyze facial features and movements to classify expressions such as happiness, sadness, anger, and surprise. Expression recognition has applications in areas like user experience testing, market research, and improving human-computer interaction.

2. Affective Computing:

Affective computing is an interdisciplinary field that combines AI and psychology to create systems that can recognize, interpret, and respond to human emotions. MIT's Media Lab has been at the forefront of this research. For example, the lab has developed wearable devices with sensors that measure physiological signals like heart rate, skin conductance, and body temperature, allowing machines to infer emotional states in real time. This technology has applications in healthcare, mental health monitoring, and stress management.

3. Chatbots with Emotional Intelligence:

Developers have been incorporating emotional intelligence into chatbots to create more engaging and empathetic interactions. AI chatbots are trained on large amounts of data, including conversation patterns and emotional cues, allowing them to respond in a more empathetic manner. For instance, Woebot, a mental health chatbot created by a team with roots at Stanford University, uses natural language processing and AI to hold conversations that draw on cognitive behavioral therapy techniques. These chatbots provide a non-judgmental platform for users to express their emotions and receive support and guidance.

4. AI-Generated Art:

While not directly related to emotional self-awareness, AI-generated art demonstrates the ability of machines to evoke emotional responses. Generative adversarial networks (GANs) and other deep neural networks have been used to build models that can create art, music, and literature. These creations may trigger various emotions in humans, such as awe, sadness, or happiness. AI-generated art is an exciting area that connects AI and emotional experience, blurring the lines between human and machine creativity.

These advances in AI emotional intelligence are still in their formative stages and have their limitations. The goal is not to replace human emotional intelligence but to assist and augment it in various domains, from mental health support to user experience design. Continued research and development in this field may pave the way for richer AI-human interactions.