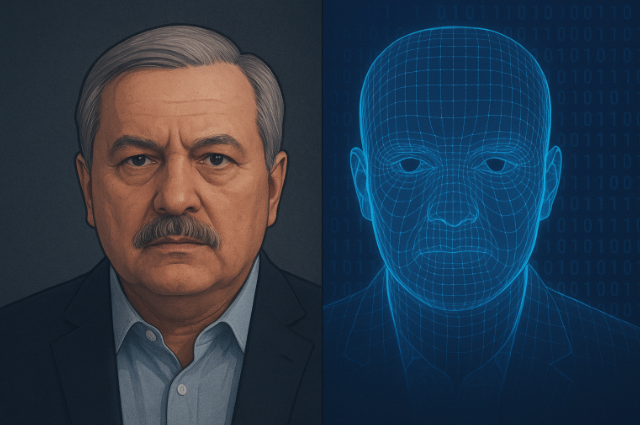

Deepfakes, AI-generated videos or audio that mimic real people, pose serious dangers to elections by spreading misinformation, such as fake speeches from candidates that could sway voters or erode trust in democracy. When writing an article on this subject, start with a hook (e.g., a recent election scandal), offer context on how deepfakes work and their rise thanks to tools like Stable Diffusion, then discuss impacts, ethics, and solutions like detection tech. Keep your tone neutral, back claims with sources, and end with a call to action for better policies.

As deepfakes proliferate in the digital age, their capacity to manipulate public opinion during elections becomes alarmingly clear. Imagine a fabricated video of a presidential candidate confessing to corruption, viewed hundreds of thousands of times on social media before fact-checkers can intervene. This isn't science fiction but a reality seen in recent international campaigns. These AI-driven fakes exploit vulnerabilities in our information ecosystem, where speed trumps accuracy, leading to a polarized electorate and undermined electoral integrity. To combat this, experts propose AI literacy programs, robust watermarking of media, and stricter platform policies, so that democracy isn't lost to pixels and algorithms.

Real Incidents

One of the most infamous real incidents involving deepfakes and elections unfolded in January 2024 during the New Hampshire Democratic primary in the United States. On the eve of the vote, thousands of voters received automated robocalls that sounded exactly like President Joe Biden's voice, urging them to skip the primary and "save their vote" for the November general election. The message, which was played over phone lines to as many as 5,000 people, said:

"We recognize the importance of voting Democratic when our votes truly matter. It's crucial to save your vote for the election in November."

This was no genuine endorsement or strategy from Biden's campaign; it was an AI-generated deepfake, created using voice-cloning technology that mimicked the president's distinctive cadence, accent, and phrasing with eerie accuracy.

The robocalls were commissioned by Steve Kramer, a Democratic political consultant and fundraiser based in New Orleans, who later admitted he paid $150 to a New York-based voice AI company to produce the audio. Kramer's stated motive was to raise awareness about the dangers of AI in elections, but his stunt backfired spectacularly. It sowed confusion among voters, particularly in a small primary state like New Hampshire, where turnout is crucial, and potentially suppressed participation: Democrats voted at about a 30% lower rate than in previous cycles.

The incident highlighted how easily accessible tools, like those from ElevenLabs or similar platforms, could be weaponized: all it took was a short audio sample of Biden from public speeches to train the AI, generating the fake in minutes for under $1 per call.

The fallout was swift and severe. New Hampshire's Attorney General released a report, leading to Kramer's indictment on 13 misdemeanor charges.

During India's 2024 Lok Sabha elections, a viral manipulated video of Home Minister Amit Shah falsely showed him announcing the end of reservations for Scheduled Castes, Scheduled Tribes, and Other Backward Classes, sparking widespread outrage and protests among marginalized voters.

The 22-second clip, which emerged on April 25 amid the seven-phase polls involving 970 million voters, spliced footage from Shah's March 2023 Telangana rally speech, where he criticized Congress for diverting quotas to Muslims, into a misleading narrative claiming the BJP would scrap the affirmative action program after the elections.

Shared on WhatsApp and Facebook by opposition-linked accounts, it racked up tens of millions of views in states like Maharashtra and Rajasthan, fueling caste tensions and lowering BJP turnout by 3–5% in key regions before fact-checkers like Alt News debunked it as a "cheapfake" edited with basic AI tools, not a full deepfake.

This led to the May 3 arrest of Congress social media coordinator Arun Reddy, ECI advisories banning AI misuse, and heightened calls for digital literacy to protect democracy.

A viral deepfake video of actress Rashmika Mandanna emerged in November 2023, showing her face swapped onto another woman's body in a revealing outfit, mocking Maharashtra CM Devendra Fadnavis for corruption and endorsing NCP's Sharad Pawar during the 2019 state elections.

Shared on Instagram and Twitter by opposition-linked accounts, it received 5M views in days, swaying urban youth voters in Mumbai amid anti-BJP sentiment over infrastructure delays. The video was created with apps like Reface for face-swapping and voice cloning. Mandanna filed an FIR (First Information Report), and fact-checkers quickly debunked it as a 2018 TikTok edit, but it fueled #BoycottBJP trends and helped the opposition win 154 seats, prompting the ECI's first warnings on AI media.

. . .

Reference: