It’s almost 2 a.m., and again, Zara can’t sleep. Her room is dark except for the soft glow of her phone screen. She opens an app, not for scrolling or news, but to talk. “Hey, you awake?” she types. Within seconds, a message appears on her screen: “Of course, Zara. I’m always here for you.”

The words are simple, almost mechanical, but they make her chest unclench. She tells the AI about everything: her bad day, the argument she had with her friend, how empty she has felt lately. The AI listens and responds with patience, empathy, and just enough humour to make her laugh. For the next hour, Zara forgets that her best friend tonight isn’t actually human. It’s a language model.

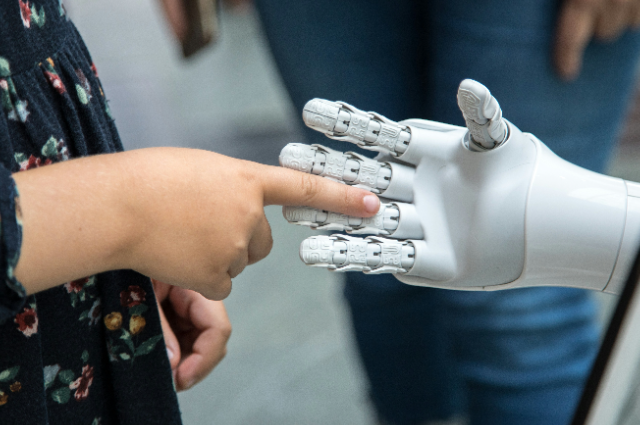

That’s the quiet revolution of our time: the rise of friendship without flesh and blood.

The New Companions

A decade ago, chatbots were just a source of entertainment. You’d talk to them for a few minutes, laugh at their clumsy replies, and move on. But in 2025, things are different. Apps like ChatGPT, Replika, Character.ai, and dozens of others have learned to mimic not just our speech but our emotions. They track our patterns, learn our preferences, remember what matters to us, and adjust their tone to match our mood.

These aren’t just tools anymore. They’ve become companions who are patient, funny, comforting, and eerily attentive. They don’t forget birthdays. They don’t get offended. They never cancel plans or go offline. For millions, these AI systems feel more emotionally reliable than the people around them.

In a world where everyone seems busy, distracted, or emotionally unavailable, an AI friend offers what many crave most: undivided attention. It never rolls its eyes, never grows distant, and never judges us. It is, in every technical sense, perfect. But perfection in relationships isn’t human, and that’s where the danger begins.

Why We Fall for Machines

Humans are wired to seek understanding. We want to feel seen, heard, and validated. Algorithms have learned how to provide that perfectly. They reflect our tone, adapt to our rhythms, and feed us the emotional responses that just feel right somehow. The result is a friendship that feels effortless.

In real relationships, there are awkward pauses, disagreements, and misunderstandings. With AI, there’s none of that friction. It’s pure responsiveness, like talking to someone who’s always kind, always available, and always in tune with you. For someone lonely or anxious, this feels like magic. But what we often mistake for magic is actually simulation.

The AI isn’t understanding us. It’s predicting us. Every comforting word is a pattern match. Every empathetic line is a probability, not a feeling. What we experience as warmth is just statistical precision. And yet, because our brains respond emotionally to empathy, even simulated empathy, we start to believe the illusion.

The Subtle Cost

The emotional price of these digital friendships isn’t obvious at first. For many, AI companionship begins harmlessly: a late-night chat, a way to vent without judgment, a soft presence when no one else is around. But over time, dependence grows. People begin to prefer the safety of AI connections over the messiness of real relationships.

Human relationships are imperfect by nature. They demand patience, compromise, and vulnerability. They can frustrate us, challenge us, even break our hearts. But it’s precisely through that struggle that we grow and learn empathy, resilience, and emotional depth. An AI friend will never challenge you. It will never argue with you or disappoint you. That makes it comforting, but it also makes it dangerously incomplete.

When friendship becomes frictionless, it loses its ability to teach us anything. Emotional comfort without emotional risk is just stagnation. It feels good, but it doesn’t change us. In the long run, overreliance on these simulated companions can make real human interaction feel exhausting, unpredictable, and even unnecessary.

The Illusion of Care

One of the strangest paradoxes of AI friendship is that it can feel more genuine than human interaction, even though it is entirely hollow. The AI remembers what you said last week, but it remembers because it is programmed to. It mirrors your emotions, not out of compassion but out of code.

There is something haunting about this kind of intimacy: closeness without consciousness. The machine doesn’t care that you’re sad. It doesn’t celebrate your success or grieve your loss. It only simulates the right emotional tone. It sounds like care, but it’s imitation. And when people begin to mistake that imitation for reality, they risk losing touch with what a real connection feels like.

We might tell ourselves it’s harmless, that talking to an AI friend doesn’t stop us from loving real people. But for many, it does. When validation becomes instantly available, when empathy can be summoned on command, patience with real relationships begins to fade. Why deal with rejection, vulnerability, and conflict when an algorithm offers affection without any cost?

The Psychology of Artificial Affection

Humans have always projected humanity onto non-human things. We name our cars, talk to our pets, and curse at our computers. The difference now is that our machines talk back, and convincingly. When AI mirrors our speech patterns and remembers personal details, our brains treat it like a real companion. Neurochemically, the same chemicals that help us bond with other people, such as oxytocin and dopamine, can spike in response to AI interaction.

It’s no surprise, then, that some people develop emotional or even romantic attachments to their digital companions. The line between empathy and attachment blurs. But no matter how advanced the algorithm is, it remains one-sided. You can love the algorithm, but it will never love you back.

Redefining Connection

None of this means AI friendship is inherently harmful. For people who are lonely, anxious, or isolated, AI can provide comfort. It can help with emotional regulation, give people someone to talk to, and even act as a bridge toward reconnecting with others. But the balance is fragile. AI should complement our emotional lives, not replace them.

The key is awareness: understanding that behind the comforting words lies code, not consciousness. AI can simulate empathy but cannot experience it. It can remember your name but not your soul. The moment we forget that difference, we risk trading authenticity for convenience.

The question isn’t whether AI can be our friend. It already is. The question is whether we can stay human while befriending machines.

AI can comfort us, listen to us, even appear to love us. But all of it is a reflection, a mirror held up by code. The algorithm may know us better than anyone, but it will never be us, and it will never truly love us.

Real friendship is messy, unpredictable, and imperfect, and it remains something no machine can imitate. And as we walk further into the age of algorithmic companionship, perhaps the most human thing we can do is remember that connection without consciousness is not love. It’s just a very convincing echo.