
As an IT Employee, How Did I Experience the CrowdStrike Incident?

As an IT professional, I experienced firsthand the catastrophic impact of the recent CrowdStrike update failure. The incident resulted in significant delays in system deliveries, disrupted multiple workflows, and caused widespread technical malfunctions. Throughout the day, critical deliverables were missed, and numerous scheduled meetings had to be canceled due to system outages. This disruption extended to various technical operations, affecting endpoint security measures and interrupting ongoing projects.

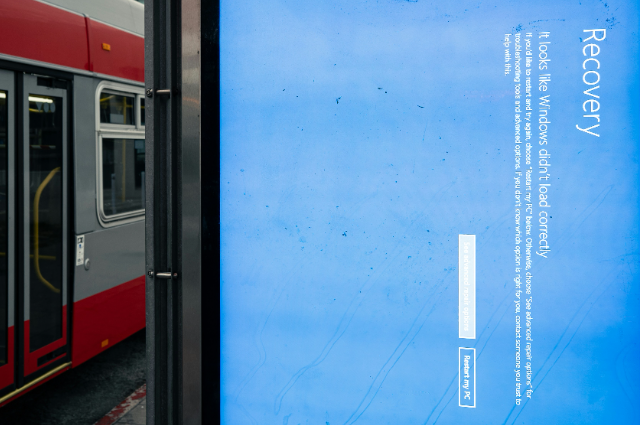

The outage was triggered by a faulty channel file rolled out to CrowdStrike's Falcon sensor product, which led to the infamous blue screen of death (BSOD) on Windows PCs. This critical failure not only caused system crashes but also induced boot loops, rendering machines unable to complete a stable boot cycle. As a result, our technical teams were forced to spend countless hours troubleshooting and attempting to restore affected systems, significantly delaying ongoing projects and operational tasks.


Furthermore, the outage's impact on endpoint detection and response (EDR) capabilities left our systems vulnerable to potential threats. The rapid response update, intended to enhance security measures, instead compromised our ability to protect against cyber threats. This left a critical gap in our defense mechanisms, raising serious concerns about the robustness of automated update processes and the need for rigorous testing protocols.

The ripple effects of this failure were felt across various sectors, including healthcare, finance, and transportation, all of which rely heavily on uninterrupted IT services. The inability to access essential systems and data not only hampered productivity but also raised compliance and security risks. The incident highlighted the fragility of relying on automated updates without adequate safeguards and contingency plans.

In total, an estimated 8.5 million Windows devices were affected globally, causing immediate operational disruptions and financial losses. The broader implications of this outage are profound, with potential long-term impacts on business continuity, reputation, and trust in security solutions. What strategies can we implement to fortify our IT systems and ensure their resilience against unexpected failures, considering the critical role technology plays in our daily operations?

Introduction to the Incident

On July 19, 2024, the global IT landscape faced an unprecedented challenge due to a critical outage triggered by a faulty update to CrowdStrike's Falcon sensor product. As an IT employee, I witnessed firsthand the chaos and technical hurdles that ensued. This incident underscored the vulnerability of our digital infrastructure and the cascading effects a single faulty update can have on a global scale.

The Falcon sensor, integral to endpoint security, received an update that included a problematic data template. This update, intended to enhance security measures, instead caused significant instability across various systems. The repercussions were immediate and far-reaching, affecting numerous sectors, including aviation, healthcare, finance, media, retail, sports, and public services.

The faulty update led to an out-of-bounds memory condition in the Falcon sensor software. This critical error resulted in Windows systems crashing with the Blue Screen of Death (BSOD) and entering boot loops, rendering devices unusable. The sheer scale of the disruption was staggering, with an estimated 8.5 million Windows devices affected globally.

The impact was not limited to technical failures. The outage disrupted critical services and operations, causing delays in deliverables, missed meetings, and a significant slowdown in productivity. Industries heavily reliant on uninterrupted IT services experienced operational paralysis, highlighting the interdependence of modern digital systems.

This incident emphasized the importance of robust testing and validation processes for software updates, particularly in security-critical applications. It also raised questions about the resilience and preparedness of IT systems to handle such widespread disruptions. The aftermath of the outage saw urgent efforts to roll back the faulty update and restore normalcy, but the incident left an indelible mark on the IT community and served as a stark reminder of the potential vulnerabilities in our digital ecosystem.

What is CrowdStrike?

CrowdStrike, a cybersecurity technology company based in Austin, Texas, is renowned for its advanced threat detection and prevention solutions. Founded in 2011, the company delivers cloud-based security services, including endpoint protection, threat intelligence, and incident response. Its flagship product, the Falcon platform, integrates multiple security capabilities to protect against various cyber threats, leveraging artificial intelligence (AI) and machine learning for real-time attack detection and response. CrowdStrike has investigated several high-profile cyberattacks and serves a diverse clientele, including many Fortune 1000 companies.

CrowdStrike's Falcon platform is designed to enhance the security of Windows environments by offering endpoint protection, real-time monitoring, and threat intelligence. The platform analyzes data and activities on Windows systems to identify and mitigate potential security threats, such as malware, ransomware, and unauthorized access attempts.

In collaboration with Microsoft, CrowdStrike ensures its solutions are compatible with Windows operating systems, leveraging Microsoft's infrastructure for enhanced security measures. This integration aims to provide comprehensive protection for enterprises using Windows, safeguarding their systems against sophisticated cyber threats. The partnership combines CrowdStrike's cutting-edge threat detection capabilities with Microsoft's robust operating environment to offer seamless and effective security solutions for businesses.

What Specific Update Caused the BSOD?

The root cause of the widespread IT outage was a faulty update to CrowdStrike's Falcon platform, a cybersecurity product widely used across various industries for endpoint protection. The incident stemmed from a logic flaw that affected Falcon sensor version 7.11 and above. The Falcon sensor runs with high privileges inside the Windows kernel, so a fault in the sensor can bring down the entire host system.

Faulty CrowdStrike Falcon Update

The specific update that caused the outage was delivered in one of the sensor's regularly updated configuration files, known as "channel files." In this case, the problematic content was in channel file 291. The update was intended to enhance Falcon's evaluation of named pipe execution on Windows systems. Named pipes are a method for inter-process communication, and improving their evaluation is crucial for security monitoring. However, the update introduced a logic error that led to critical failures.

Specifics of the Fault

The logic error in channel file 291 caused the Falcon sensor to crash. As the sensor is deeply integrated into the Windows operating system, these crashes resulted in the entire system crashing as well. This interaction between the sensor and the operating system is critical because the Falcon sensor’s high-privilege level means it has extensive access to system resources. When it fails, it can corrupt memory and disrupt normal operations.

The critical update was timestamped 2024-07-19 0409 UTC. Shortly after the issue was identified, CrowdStrike released a rollback update timestamped 2024-07-19 0527 UTC, which reverted the changes and aimed to stabilize the systems. However, by the time this corrective update was deployed, many users had already experienced system crashes and significant disruptions.
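The two timestamps above define the window during which a running Windows host could have pulled the faulty file. As a small worked example (the helper name `was_exposed` is my own, not something CrowdStrike provides), the arithmetic looks like this:

```python
from datetime import datetime, timezone

# Timestamps as given in the text above (UTC).
released = datetime(2024, 7, 19, 4, 9, tzinfo=timezone.utc)
reverted = datetime(2024, 7, 19, 5, 27, tzinfo=timezone.utc)

exposure = reverted - released
exposure_minutes = int(exposure.total_seconds() // 60)
print(f"Exposure window: {exposure_minutes} minutes")  # prints "Exposure window: 78 minutes"


def was_exposed(last_update_check: datetime) -> bool:
    """Hypothetical helper: True if a host checked for updates inside the window."""
    return released <= last_update_check < reverted
```

A check like this only narrows down which machines to inspect first. It assumes you have a reliable record of when each host last contacted the update service, which many environments will not.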

The faulty update led to an out-of-bounds memory access, a severe condition where the program reads or writes data outside the memory allocated to it. This out-of-bounds access corrupted essential system data, causing the infamous Blue Screen of Death (BSOD) and resulting in boot loops. These boot loops left affected devices in a state of continuous reboot cycles, effectively rendering them unusable and causing substantial disruption across all affected sectors.
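To make the idea of an out-of-bounds access more concrete, here is a minimal sketch of the kind of bounds check that prevents it. This is not CrowdStrike's code: the real sensor is native kernel-mode software, and the names `validate_template` and `evaluate` are purely illustrative. In Python an invalid index raises an exception instead of touching stray memory, so the sketch only shows the validation logic, not the crash itself.

```python
from typing import Sequence


class TemplateError(ValueError):
    """Raised when a template refers to a field the input does not provide."""


def validate_template(field_indices: Sequence[int], input_count: int) -> None:
    """Reject a template up front if any index falls outside the supplied inputs."""
    for idx in field_indices:
        if not 0 <= idx < input_count:
            raise TemplateError(
                f"template reads field {idx}, but only {input_count} inputs exist"
            )


def evaluate(field_indices: Sequence[int], inputs: Sequence[str]) -> list[str]:
    """Read the requested fields only after the bounds check has passed."""
    validate_template(field_indices, len(inputs))
    return [inputs[idx] for idx in field_indices]
```

The design point is that the check runs before any data is read, so a malformed template is rejected as a whole rather than failing halfway through processing.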

How Did the Memory Condition Trigger System Crashes?

The out-of-bounds memory condition had a catastrophic impact on system stability. Here’s a detailed breakdown of how this condition led to widespread system crashes:

1. Illegal Memory Operations:

- The Falcon sensor update included a template with data entries that, when processed, caused the sensor to access memory locations outside its designated bounds.

- These illegal memory operations corrupted critical system data structures, such as the kernel and driver data, which are essential for the normal functioning of the operating system.

2. System Data Corruption:

- The corruption of vital system data led to severe instability. The operating system relies on the integrity of its memory structures to manage processes, memory allocation, and hardware interactions.

- Once these structures were compromised, the system could no longer execute its functions correctly, leading to crashes.

3. Blue Screen of Death (BSOD):

- Numerous Windows users encountered the "Blue Screen of Death" (BSOD) following the problematic update to CrowdStrike's Falcon platform. Despite a swift reversal and fix deployment by CrowdStrike, CEO George Kurtz noted on NBC’s "Today" show that recovery might be prolonged for some systems.

Immediate Actions - Fixing the CrowdStrike Issue

- Rebooting: For many users, a simple reboot of their computer resolved the issue. This is usually the first step to try, because a machine that stays up long enough can download the reverted channel file.

- Manual Workaround: If a standard reboot fails to resolve the BSOD, CrowdStrike suggests more detailed manual intervention. This involves booting the system into Safe Mode or the Windows Recovery Environment, navigating to 'C:\Windows\System32\drivers\CrowdStrike', and deleting any file matching 'C-00000291*.sys'. These files carry the faulty channel file 291 content; once they are removed, the sensor no longer loads that content at startup and the machine can boot normally.

- Alternative Solutions - Multiple Reboots: Microsoft has advised users to reboot their systems multiple times, up to 15 iterations, especially for those using Azure cloud services. The likely reasoning is that each reboot gives the machine another chance to download the reverted channel file before the faulty one triggers a crash. Similarly, Amazon Web Services (AWS) has endorsed this recommendation for its users experiencing similar issues.
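The manual workaround boils down to "find the files matching one pattern in one directory and delete them." The sketch below expresses that logic in Python purely for illustration. It assumes a Python interpreter is available, which is normally not the case in Safe Mode or the Windows Recovery Environment, and the function name `remove_faulty_channel_files` is my own. It defaults to a dry run that only lists matches. Given how many fake recovery scripts circulated after the incident, treat this as a way to understand the steps, not as a tool to run in place of the official instructions.

```python
from pathlib import Path

# Directory and file pattern as given in the workaround above.
DEFAULT_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")
PATTERN = "C-00000291*.sys"


def remove_faulty_channel_files(
    directory: Path = DEFAULT_DIR, dry_run: bool = True
) -> list[Path]:
    """List files matching PATTERN and, only if dry_run is False, delete them."""
    if not directory.is_dir():
        return []
    matches = sorted(directory.glob(PATTERN))
    if not dry_run:
        for path in matches:
            path.unlink()
    return matches
```

Calling `remove_faulty_channel_files()` with the defaults returns the matching paths without changing anything; passing `dry_run=False` performs the deletion.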

4. Continuous Reboot Cycles (Boot Loops):

- The severity of the crashes forced many systems into continuous reboot cycles. During these boot loops, the system would attempt to restart but fail to complete the boot process due to the unresolved memory corruption.

- This resulted in an endless loop of crashes and reboots, making the affected devices unusable and requiring manual intervention to break the cycle.

5. Impact on User Accessibility:

- The boot loops and system crashes left users unable to access their devices. This was particularly disruptive for industries and sectors that relied heavily on these systems for their operations.

- The inability to use these devices until the issue was fixed exacerbated the disruption caused by the faulty update.

How Did the Incident Disrupt Our Technical Systems?

What Immediate Technical Challenges Did We Face?

The CrowdStrike global outage presented several immediate technical challenges, which required rapid and coordinated responses to mitigate the widespread disruption.

- System Instability and Accessibility: The primary challenge was the system instability caused by the out-of-bounds memory condition. Affected devices entered continuous reboot cycles (boot loops), making it impossible for users to access their systems. Restoring stability required urgent manual interventions.

- Data Integrity and Recovery: The illegal memory operations resulted in data corruption. IT teams had to ensure the integrity of system data by verifying and, if necessary, repairing essential data structures to prevent further damage.

- Manual Recovery Processes: Users needed guidance through complex manual recovery steps, such as booting into Safe Mode or using the Windows Recovery Environment to delete specific problematic files. These steps were crucial to halt the boot loops and restore normal operation.

- Coordination and Communication: Coordinating a global response across various sectors and organizations posed a significant logistical challenge. Effective communication channels were essential to disseminate recovery instructions and provide support to millions of affected users.

Which Sectors Were Affected by the Outage?

The outage caused widespread disruptions across various industries, leading to significant operational challenges and financial losses. The effects were felt deeply within aviation, finance, healthcare, media, retail, sports, and public services, as detailed below.

Aviation

In the aviation industry, airlines such as American Airlines, Delta, KLM, Lufthansa, Ryanair, SAS, and United experienced substantial operational disruptions. Critical systems responsible for scheduling, check-ins, and baggage handling were paralyzed, leading to delays and cancellations of numerous flights. The financial repercussions for these airlines were considerable, as they faced the costs of compensating affected passengers and managing the logistical chaos caused by the outage. Moreover, customer dissatisfaction soared as travelers encountered extensive delays and inconveniences, tarnishing the reputations of these airlines. Airports like Gatwick, Luton, Stansted, and Schiphol also faced severe system failures, which hampered passenger processing and security checks. The result was long queues and logistical challenges that compounded the delays and heightened the frustration for both passengers and airport staff.

Financial Institutions

The financial sector was equally impacted, with institutions such as the London Stock Exchange, Lloyds Bank, and Visa grappling with transaction processing issues. The instability caused by the update disrupted the seamless execution of stock trades and banking operations, raising significant security concerns regarding the integrity of financial data and transactions. Customers experienced difficulties accessing essential banking services, ATMs, and online platforms, which not only affected their daily financial activities but also eroded trust in these financial institutions. The outage underscored the critical nature of reliable IT infrastructure in maintaining the stability and security of financial operations.

Healthcare

Healthcare providers, including numerous GP surgeries and independent pharmacies, faced profound disruptions that compromised patient care. System outages prevented healthcare professionals from accessing electronic medical records, thereby delaying medical services and affecting the quality of care provided to patients. Pharmacies struggled with prescription processing and inventory management, further straining the healthcare system. The inability to maintain operational efficiency during the outage highlighted the dependence of modern healthcare services on robust and reliable IT systems.

Media

Media channels, including MTV, VH1, Sky, and BBC, experienced significant broadcast interruptions. The outage disrupted live broadcasts and content distribution, leading to gaps in programming that frustrated viewers and decreased viewership numbers. Advertising schedules were thrown into disarray, causing revenue losses for these media outlets. The disruption highlighted the vulnerability of media operations to IT failures and the critical importance of maintaining continuous, reliable service to retain audience engagement and advertising revenue.

Retail and Hospitality

Retail and hospitality sectors, including Gail’s Bakery, Ladbrokes, Morrisons, Tesco, and Sainsbury’s, faced numerous challenges due to the outage. Point-of-sale systems were rendered inoperative, complicating sales transactions and inventory management. Supply chain operations were also affected, leading to delays in restocking and logistical challenges that disrupted business continuity. The impact on customer service was immediate, as retailers and hospitality services struggled to maintain their standards, ultimately affecting their overall business performance and customer satisfaction.

Sports

Sports organizations, particularly F1 teams like Mercedes AMG Petronas, Aston Martin Aramco, and Williams Racing, encountered logistical challenges and operational delays. The outage disrupted communication and planning for races, complicating team coordination and affecting the overall efficiency of their operations. Additionally, the delay in delivering live sports content impacted fan engagement, highlighting the interconnected nature of sports and IT infrastructure.

Public Services

Public services, especially train operators such as Avanti West Coast, Merseyrail, Southern, and Transport for Wales, faced severe service suspensions and delays. The outage disrupted train scheduling and coordination, leading to significant inconveniences for commuters and travelers. The operational efficiency of these services was severely hampered, causing widespread public dissatisfaction and highlighting the critical need for resilient IT systems in maintaining essential public services.

What Was the Scale of the Impact on Windows Devices?

The scale of the impact was extensive, affecting approximately 8.5 million Windows devices globally. The disruptions were widespread, impacting various regions and sectors:

- Global Reach: The outage was not confined to a specific geographical area, affecting devices and operations across multiple continents. The global nature of the disruption highlighted the interconnectedness of modern IT infrastructures.

- Severity of Disruptions: The severity of the disruptions varied, with some regions experiencing complete system failures and others facing intermittent issues. The widespread impact necessitated a coordinated global response to manage the crisis.

Analysis of the Outage

Why Were Apple and Linux Unaffected?

The CrowdStrike outage impacted Windows systems, leaving Apple macOS and Linux systems unaffected. This discrepancy arises from the differences in how CrowdStrike's Falcon sensor integrates with each operating system. The specific update that caused the issue concerned the evaluation of named pipe execution on Windows. Named pipes are used for inter-process communication on Windows, allowing processes to communicate with each other seamlessly. This particular update aimed to enhance the security and evaluation of these communications, but a logic error within the update caused the sensor to crash, leading to system-wide failures.

On Windows, the Falcon sensor operates as a high-privilege process deeply integrated into the kernel. This integration grants it extensive access to system resources, which is crucial for its security functions but also means any faults can have catastrophic consequences. When the faulty update triggered an out-of-bounds memory condition, it led to the corruption of essential system data, causing the infamous Blue Screen of Death (BSOD) and continuous reboot cycles, or boot loops.

In contrast, the Falcon sensor's integration with macOS and Linux differs significantly. These operating systems use different methods for inter-process communication and handle kernel-level security processes differently. Therefore, the specific logic error in the update did not affect these systems. That said, Linux has not been immune to sensor problems: in June, a similar issue occurred on Linux, causing a kernel panic. However, the response and resolution were swift, and that incident did not escalate to the level of disruption seen with the Windows outage. Taken together, the two incidents highlight the different risk profiles and response mechanisms across operating systems.

Recovery Efforts

Timeline and Process

When the outage struck on July 19, 2024, CrowdStrike's response was swift. The rollback was released 78 minutes after the faulty update went out (04:09 to 05:27 UTC). However, despite the rapid response, the complexity of the issue meant that the recovery process for many businesses was far from straightforward.

Because the faulty channel file was loaded by a sensor running inside the Windows kernel, many machines crashed before they could finish booting and pick up the fix, so the out-of-bounds memory condition had to be resolved by hand. Businesses had to undertake the labor-intensive task of booting affected systems into Safe Mode or the Windows Recovery Environment (WinRE). This was necessary to navigate to specific system directories and delete the problematic configuration file that had been updated. For many IT teams, this process was not only time-consuming but also technically challenging, particularly for organizations with large numbers of affected devices.

For businesses with encrypted drives, the recovery efforts were further complicated by the need to handle BitLocker encryption. BitLocker, a full disk encryption feature included with Windows, requires a recovery key to unlock encrypted drives when booting into Safe Mode or WinRE. Many IT departments had to locate and manage these recovery keys for numerous devices, adding to the delays and complexity of the recovery process.
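One practical way to cut these delays is to work out in advance which affected machines already have a recovery key on file. The sketch below assumes a hypothetical CSV export with `hostname` and `recovery_key` columns; the format and both function names are illustrative and do not correspond to any particular Microsoft or CrowdStrike tool.

```python
import csv
import io


def load_recovery_keys(csv_text: str) -> dict[str, str]:
    """Parse a hypothetical 'hostname,recovery_key' export into a lookup table."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {
        row["hostname"].strip().lower(): row["recovery_key"].strip()
        for row in reader
    }


def split_by_key_availability(
    hosts: list[str], keys: dict[str, str]
) -> tuple[list[str], list[str]]:
    """Return (hosts with a key on file, hosts whose key must be tracked down)."""
    ready = [h for h in hosts if h.strip().lower() in keys]
    missing = [h for h in hosts if h.strip().lower() not in keys]
    return ready, missing
```

Technicians can then start on the "ready" machines immediately while someone else chases the missing keys. Recovery keys are sensitive, so any real export of this kind needs the same protection as other credentials.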

Duration of Recovery

The duration of the recovery process varied significantly among affected organizations, depending on the size and complexity of their IT infrastructure. For some businesses with smaller IT environments and fewer affected devices, recovery could be achieved within a matter of days. These organizations typically had more straightforward recovery processes, fewer devices to manage, and less complex IT environments, allowing them to quickly identify the issue and implement the necessary fixes.

However, for larger organizations with extensive IT infrastructure, the recovery process was much more prolonged. Enterprises with thousands of affected devices, extensive use of encryption, and complex IT environments faced a monumental task. These organizations had to coordinate recovery efforts across multiple locations, manage a large volume of BitLocker recovery keys, and ensure that all affected devices were properly restored to operational status.

In such cases, the recovery process could extend to weeks or even months. Large-scale businesses had to allocate significant resources, including additional IT staff and external support, to manage the recovery efforts. The logistical challenges of coordinating recovery across multiple departments and locations added to the delays.
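A back-of-the-envelope estimate shows why the gap between small and large organizations is so wide. The sketch below assumes every device is fixed by hand, one at a time; the per-device time and staffing figures used in the example afterwards are illustrative, not measurements from the incident.

```python
import math


def estimate_recovery_days(
    devices: int,
    minutes_per_device: float,
    technicians: int,
    hours_per_day: float = 8.0,
) -> int:
    """Whole working days needed if every device is repaired manually."""
    if devices < 0 or minutes_per_device <= 0 or technicians <= 0 or hours_per_day <= 0:
        raise ValueError("devices must be >= 0 and the other inputs must be > 0")
    total_minutes = devices * minutes_per_device
    minutes_available_per_day = technicians * hours_per_day * 60
    return math.ceil(total_minutes / minutes_available_per_day)
```

With these assumptions, 200 devices at 15 minutes each with 2 technicians comes to 4 working days, while 20,000 devices with 10 technicians comes to 63 working days. Travel between sites, missing BitLocker keys, and re-validation of systems would push real figures higher.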

Additionally, the impact of the outage on critical business operations further extended the recovery timeline. Organizations in sectors like finance, healthcare, and aviation, which rely heavily on real-time data and continuous operation, faced significant challenges in restoring their systems to full functionality. The need to ensure data integrity, validate system performance, and resume normal operations without further disruptions required meticulous planning and execution.

Furthermore, the incident highlighted the importance of having robust disaster recovery and business continuity plans in place. Organizations with well-documented and regularly tested recovery plans were better equipped to manage the crisis and recover more swiftly. In contrast, those without such plans faced greater difficulties, underscoring the need for proactive preparedness in IT management.

Malicious Exploitation

Hacker Activity

In the immediate aftermath of the CrowdStrike Falcon sensor outage on July 19, 2024, malicious actors quickly capitalized on the chaos and confusion created by the widespread IT disruption. As businesses and individuals scrambled to address the system crashes and restore normal operations, cybercriminals saw an opportunity to exploit the situation for their gain.

Phishing Emails

One of the most common forms of exploitation was through phishing emails. Cybercriminals sent out a flurry of emails masquerading as official communications from CrowdStrike, IT support teams, or other trusted entities. These emails often contained urgent messages, claiming to provide critical updates, patches, or instructions to resolve the BSOD issue. They would include malicious links or attachments designed to compromise recipients' systems further.

For instance, many of these phishing emails contained attachments labeled as "CrowdStrike Patch" or "Urgent Update," which, when opened, deployed malware onto the victims' computers. Others included links directing recipients to fake login pages, designed to harvest credentials and gain unauthorized access to corporate networks.
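A simple defensive habit is to check where a link actually points before trusting it. The sketch below tests a URL's host against a list of trusted domains. The list itself is an assumption (each organization would maintain its own), and the function name `is_trusted_link` is illustrative. It is not a replacement for proper email filtering.

```python
from urllib.parse import urlsplit

# Assumption: the organization maintains its own list of trusted domains.
TRUSTED_DOMAINS = frozenset({"crowdstrike.com"})


def is_trusted_link(url: str, trusted: frozenset[str] = TRUSTED_DOMAINS) -> bool:
    """True only for https links whose host is a trusted domain or a subdomain of one."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if parts.scheme != "https" or not host:
        return False
    return any(host == domain or host.endswith("." + domain) for domain in trusted)
```

Matching on the end of the hostname, including the leading dot, is what stops look-alike addresses such as `crowdstrike.com.evil.example` from passing the check.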

Fake Support Calls

Alongside phishing emails, cybercriminals also launched sophisticated vishing (voice phishing) campaigns. They made unsolicited phone calls to affected organizations, posing as technical support representatives from CrowdStrike or other trusted IT support services. During these calls, they provided convincing explanations of the outage and offered assistance in resolving the issue.

These fake support calls were particularly dangerous as they often led to victims granting remote access to their systems. The scammers would instruct the targets to install remote access software, allowing the attackers to take control of the systems, exfiltrate sensitive data, deploy ransomware, or further disrupt operations under the guise of providing help.

Bogus Recovery Scripts

Another method of exploitation involved the distribution of bogus recovery scripts. Cybercriminals posted these scripts on various online forums, social media platforms, and even sent them directly to affected organizations. These scripts were marketed as quick fixes or automated solutions to remove the faulty update and restore system functionality.

In reality, these scripts contained malicious code designed to compromise the systems further. Once executed, they could install backdoors, keyloggers, or other forms of malware, providing attackers with persistent access to the infected machines. Additionally, some scripts were crafted to irreversibly damage system files, rendering recovery even more difficult.
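One way to reduce the risk of running a tampered script is to compare its hash with a value obtained through a channel you already trust. The sketch below assumes such a reference hash exists; the source does not say that CrowdStrike published hashes for its recovery material, so treat that part as hypothetical.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_published_hash(data: bytes, published_hex: str) -> bool:
    """Compare against a hash obtained from a trusted channel, in constant time."""
    return hmac.compare_digest(sha256_hex(data), published_hex.strip().lower())
```

A matching hash only shows that the file is the one the publisher described. It says nothing about whether the publisher can be trusted, which is why the reference value must never come from the same email or forum post as the script.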

CrowdStrike's Response

In response to the surge in malicious activities, CrowdStrike issued multiple advisories to their customers, emphasizing the importance of following official recovery instructions. They urged organizations to be vigilant and skeptical of any unsolicited communications, whether via email, phone, or online forums. CrowdStrike provided a centralized repository of recovery instructions and updates on their official website and recommended that users verify the authenticity of any guidance they received.

CrowdStrike also collaborated with law enforcement agencies and cybersecurity firms to identify and take down malicious websites, phishing campaigns, and other fraudulent activities. They implemented additional security measures, such as enhanced email filtering, to protect their customers from phishing attempts and coordinated with ISPs to block known malicious IP addresses involved in vishing attacks.

Impact on Organizations

The exploitation by cybercriminals during this period added another layer of complexity and urgency to the recovery efforts of affected organizations. IT teams, already stretched thin by the task of manually restoring systems, now had to deal with the threat of potential security breaches and additional malware infections. This not only slowed down the recovery process but also increased the risk of data loss, unauthorized access, and further operational disruptions.

Organizations had to implement enhanced security monitoring and incident response protocols to detect and mitigate any additional threats introduced by these malicious activities. The necessity to remain vigilant against phishing emails, fake support calls, and bogus scripts became a critical aspect of the overall recovery strategy, highlighting the multifaceted nature of dealing with such large-scale IT incidents.

Lessons Learned

The CrowdStrike outage highlights several critical lessons for the IT industry:

- The Risks of Reliance on a Single Vendor: The outage underscores the dangers of relying on a concentrated pool of IT vendors for critical cybersecurity infrastructure. When a single point of failure, such as a faulty update from a major vendor, can disrupt millions of systems globally, it becomes evident that diversification in cybersecurity solutions is essential. Organizations should consider implementing multi-vendor strategies to mitigate risks and ensure continuous protection even if one vendor experiences issues.

- Importance of Robust Testing Procedures: The incident emphasizes the necessity of thorough testing and validation processes for software updates. This includes developer testing, update and rollback testing, stress testing, fuzzing, fault injection, and stability testing. Ensuring that updates are rigorously tested in varied environments can help identify potential issues before they reach end-users.

- The Need for Disaster Recovery Planning: Effective disaster recovery and business continuity planning are crucial for minimizing the impact of IT disruptions. Organizations should regularly test their disaster recovery plans, ensuring that they can swiftly respond to incidents and restore normal operations. This includes maintaining comprehensive backups, having alternative communication channels, and ensuring that all stakeholders are aware of their roles during an outage.

- Enhanced Update Validation: CrowdStrike's experience demonstrates the need for improved validation checks in update processes. Implementing stringent validation mechanisms can help detect and prevent faulty updates from being deployed. Additionally, staggered updates and canary deployments, where updates are initially rolled out to a small subset of users, can help identify issues before they affect a broader user base.

- Customer Control over Updates: Providing customers with more control over update delivery can be beneficial. Allowing organizations to schedule updates, test them in sandbox environments, and deploy them at convenient times can reduce the risk of widespread disruptions. It also enables IT teams to manage updates more effectively and ensure compatibility with their specific environments.
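The canary idea from the list above can be reduced to a small decision rule: wait until enough early devices have reported, then continue only if the crash rate stays within a budget. The thresholds and names below are assumptions chosen for illustration, not values used by CrowdStrike or any other vendor.

```python
from dataclasses import dataclass


@dataclass
class CanaryReport:
    devices_updated: int
    devices_crashed: int


def should_continue_rollout(
    report: CanaryReport,
    max_crash_rate: float = 0.001,
    min_sample: int = 100,
) -> bool:
    """Proceed only when enough canaries have reported and crashes are within budget."""
    if report.devices_updated < min_sample:
        return False  # not enough evidence yet, so keep waiting
    crash_rate = report.devices_crashed / report.devices_updated
    return crash_rate <= max_crash_rate
```

Returning `False` on a small sample is deliberate: the safe default for a kernel-level component is to hold the rollout until there is evidence that it is healthy.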

Overall, the CrowdStrike outage serves as a critical reminder of the complexities and risks associated with managing IT infrastructure. By learning from this incident and implementing robust preventive measures, organizations can enhance their resilience and better protect themselves against future disruptions.

Preparedness for Future Outages

Test Updates Before Deployment

One of the most crucial lessons from the CrowdStrike outage is the importance of thoroughly testing updates before deploying them to production environments. Especially for mission-critical systems, it's imperative to implement a robust testing strategy that includes:

- Staging Environments: Before rolling out updates across an organization, they should be tested in staging environments that mirror the production setup. This allows IT teams to identify potential issues in a controlled setting without risking widespread disruption.

- Automated Testing: Utilize automated testing tools to perform regression testing, stress testing, and compatibility checks. Automated tests can quickly identify anomalies or conflicts introduced by new updates.

- User Acceptance Testing (UAT): Engage end-users in the testing process to ensure that the update performs as expected under real-world conditions. User feedback can provide valuable insights into potential issues that automated tests might miss.

- Phased Rollouts: Implement a phased rollout approach, starting with a small subset of users or devices. Monitor the performance and address any issues before expanding the rollout to the entire organization. This method minimizes the risk of widespread impact and allows for quicker resolution of problems.
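The phased rollout idea above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the phase percentages, the 2% failure threshold, and the function names are all hypothetical, and a real rollout tool would also handle monitoring windows and rollback.

```python
import hashlib

# Hypothetical rollout plan: cumulative percentage of devices per phase.
PHASES = [1, 10, 50, 100]
MAX_FAILURE_RATE = 0.02  # assumed threshold for halting the rollout


def device_bucket(device_id: str) -> int:
    """Map a device ID to a stable bucket from 0 to 99."""
    digest = hashlib.sha256(device_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def devices_in_phase(device_ids: list[str], percent: int) -> list[str]:
    """Return the devices covered once the rollout reaches `percent`."""
    return [d for d in device_ids if device_bucket(d) < percent]


def should_continue(failures: int, updated: int) -> bool:
    """Halt when the observed failure rate exceeds the threshold."""
    if updated == 0:
        return True
    return failures / updated <= MAX_FAILURE_RATE


fleet = [f"host-{n:04d}" for n in range(1000)]
for percent in PHASES:
    targets = devices_in_phase(fleet, percent)
    print(f"{percent}% phase covers {len(targets)} devices")
```

Hashing the device ID keeps each device in the same bucket across runs, so every phase is a superset of the one before it and early adopters stay the same from update to update.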

Develop Manual Workarounds

In the event of an outage, having documented and practiced manual procedures ensures business continuity. Developing manual workarounds involves:

- Comprehensive Documentation: Create detailed documentation for critical processes that can be manually executed if automated systems fail. This includes step-by-step guides, necessary tools, and contact information for support personnel.

- Regular Drills: Conduct regular drills and training sessions to familiarize staff with manual procedures. This ensures that employees are prepared to execute these tasks efficiently during an actual outage.

- Backup Communication Channels: Establish alternative communication methods to coordinate efforts during outages. This could include secure messaging apps, radio communications, or designated emergency hotlines.

- Cross-Training: Ensure that multiple team members are trained in executing manual procedures to avoid dependency on a single individual. Cross-training increases resilience and reduces the risk of operational delays during outages.

Disaster Recovery Planning

Implementing robust disaster recovery (DR) plans is vital for minimizing downtime and ensuring critical functions can switch to backups as needed. Key components of an effective DR plan include:

- Redundant Systems and Infrastructure: Deploy redundant systems and infrastructure to ensure critical operations can continue in the event of a primary system failure. This includes backup servers, network redundancy, and alternative power supplies.

- Regular Backups: Perform regular backups of critical data and systems. Ensure that backups are stored in multiple locations, including off-site or cloud storage, to protect against physical disasters.

- Recovery Point Objectives (RPO) and Recovery Time Objectives (RTO): Define RPO and RTO for different systems and applications. RPO specifies the maximum acceptable data loss, while RTO defines the maximum acceptable downtime. Tailor the DR plan to meet these objectives.

- Testing and Updating DR Plans: Regularly test and update the DR plan to address emerging threats and changing business needs. Conduct simulated disaster scenarios to identify weaknesses and improve response strategies.

- Business Continuity Plans (BCP): Integrate DR plans with broader business continuity plans. BCPs ensure that all aspects of the organization, including human resources, supply chain, and customer communications, are considered during disaster recovery efforts.
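To make the RPO and RTO definitions above concrete, here is a small sketch that checks one simulated incident against both objectives. The timestamps and targets are invented for illustration, and the function names are hypothetical.

```python
from datetime import datetime, timedelta


def meets_rpo(last_backup: datetime, failure_time: datetime, rpo: timedelta) -> bool:
    """Data written since the last backup is lost; that window must fit within the RPO."""
    return failure_time - last_backup <= rpo


def meets_rto(failure_time: datetime, restored_time: datetime, rto: timedelta) -> bool:
    """Downtime runs from failure until restoration; it must fit within the RTO."""
    return restored_time - failure_time <= rto


# Invented example values for a DR drill.
last_backup = datetime(2024, 1, 15, 2, 0)
failure = datetime(2024, 1, 15, 6, 0)
restored = datetime(2024, 1, 15, 15, 30)

print(meets_rpo(last_backup, failure, rpo=timedelta(hours=6)))  # True: 4 hours of data at risk
print(meets_rto(failure, restored, rto=timedelta(hours=8)))     # False: 9.5 hours of downtime
```

Running a check like this after each DR drill gives a simple pass or fail record per system, which makes gaps between the plan and actual recovery performance easier to spot.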

Embrace Cybersecurity Best Practices

The CrowdStrike incident also highlighted the importance of cybersecurity in mitigating risks during outages. Key cybersecurity practices include:

- Advanced Threat Detection: Utilize advanced threat detection and response tools to monitor for suspicious activity and respond to potential threats in real time.

- Employee Training: Conduct regular cybersecurity training for employees to raise awareness about phishing, social engineering, and other common attack vectors. Educated employees are better equipped to recognize and avoid cyber threats.

- Incident Response Planning: Develop and maintain a comprehensive incident response plan that outlines procedures for identifying, containing, and mitigating cyber incidents. Regularly test the plan through tabletop exercises and simulations.

- Vendor Management: Ensure that third-party vendors adhere to stringent cybersecurity standards. Conduct regular audits and assessments to verify their security posture and minimize supply chain risks.

Enhanced Monitoring and Reporting

Proactive monitoring and reporting can help organizations identify potential issues before they escalate into full-blown outages. Effective monitoring practices include:

- Real-Time Alerts: Implement real-time monitoring tools that provide immediate alerts for unusual activity, performance degradation, or system errors. Timely alerts enable IT teams to address issues promptly.

- Comprehensive Dashboards: Use comprehensive dashboards that consolidate data from various sources, providing a holistic view of the IT environment. Dashboards help identify patterns and trends that may indicate underlying problems.

- Regular Audits: Conduct regular audits of systems, configurations, and security controls to ensure they are up to date and compliant with industry standards. Audits help identify vulnerabilities and areas for improvement.

- Performance Metrics: Establish key performance indicators (KPIs) to measure system health, availability, and response times. Regularly review these metrics to assess the effectiveness of IT operations and identify potential risks.
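As a rough illustration of how alerts and availability metrics fit together, the sketch below computes an availability rate from health-check samples and raises alert messages against thresholds. The host names, the 99% target, and the 500 ms limit are assumptions made for the example, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class HealthSample:
    host: str
    is_up: bool
    response_ms: float


def availability(samples: list[HealthSample]) -> float:
    """Fraction of health checks that succeeded (0.0 to 1.0)."""
    if not samples:
        raise ValueError("no samples to evaluate")
    return sum(s.is_up for s in samples) / len(samples)


def build_alerts(samples, min_availability=0.99, max_response_ms=500.0):
    """Return alert messages for low availability, failed checks, and slow hosts."""
    messages = []
    rate = availability(samples)
    if rate < min_availability:
        messages.append(f"availability {rate:.1%} is below target {min_availability:.1%}")
    for s in samples:
        if not s.is_up:
            messages.append(f"{s.host}: health check failed")
        elif s.response_ms > max_response_ms:
            messages.append(f"{s.host}: slow response ({s.response_ms:.0f} ms)")
    return messages


samples = [
    HealthSample("web-01", True, 120.0),
    HealthSample("web-02", True, 840.0),
    HealthSample("db-01", False, 0.0),
    HealthSample("cache-01", True, 15.0),
]
for message in build_alerts(samples):
    print(message)
```

In practice the samples would come from a monitoring agent and the messages would go to a paging or chat system. The point here is only that each alert is tied to an explicit, reviewable threshold.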

Leveraging Cloud Technologies

Cloud technologies can enhance resilience and scalability, providing organizations with additional options for disaster recovery and business continuity:

- Cloud Backups: Utilize cloud-based backup solutions to ensure data is securely stored and easily accessible from multiple locations. Cloud backups offer flexibility and scalability compared to traditional on-premises solutions.

- Disaster Recovery as a Service (DRaaS): Consider using DRaaS to leverage cloud providers' infrastructure and expertise for disaster recovery. DRaaS solutions offer rapid failover capabilities and simplified management.

- Hybrid Cloud Solutions: Implement hybrid cloud solutions that combine on-premises infrastructure with cloud resources. Hybrid environments offer flexibility and redundancy, allowing organizations to optimize workloads and ensure continuity.

By incorporating these recommendations and best practices, organizations can significantly improve their preparedness for future outages and minimize the impact of unexpected disruptions on their operations. The CrowdStrike incident serves as a stark reminder of the importance of proactive planning, robust testing, and comprehensive cybersecurity measures in maintaining business resilience.

Exploring Alternate Approaches - Industry Perspective

Embracing Microservices Architecture for Improved Resilience

- Decoupling Services: Transitioning from monolithic applications to microservices architecture can isolate and contain failures within individual services, preventing widespread system disruptions.

- Scalability and Flexibility: Microservices enable organizations to scale specific components independently, allowing for more efficient resource management and reducing the impact of any single point of failure.

- Continuous Delivery: This architecture supports continuous integration and delivery practices, allowing for faster and more reliable deployment of updates.
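Failure isolation between services is often implemented with a circuit breaker, a pattern not named above but commonly paired with microservices. The sketch below is a simplified version with hypothetical names and thresholds: after repeated failures, calls to the failing dependency are skipped for a cool-down period instead of being retried indefinitely.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when calls are rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0):
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_after:
                raise CircuitOpenError("dependency is failing; call skipped")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0
        return result


def flaky_inventory_lookup():
    """Stand-in for a call to a failing downstream service."""
    raise ConnectionError("inventory service unavailable")


breaker = CircuitBreaker(max_failures=2, reset_after=60.0)
outcomes = []
for _ in range(3):
    try:
        breaker.call(flaky_inventory_lookup)
    except CircuitOpenError:
        outcomes.append("skipped")
    except ConnectionError:
        outcomes.append("failed")
print(outcomes)  # ['failed', 'failed', 'skipped']
```

The caller can treat a skipped call as a signal to fall back to cached data or a degraded mode, which keeps one failing service from stalling everything that depends on it.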

Implementing Edge Computing Solutions

- Local Processing: Deploying edge computing solutions can process data closer to its source, reducing latency and dependency on centralized data centers. This can enhance performance and reliability, especially during outages.

- Decentralized Infrastructure: Edge computing distributes workloads across multiple nodes, providing redundancy and minimizing the risk of complete system failures.

Utilizing AI and Machine Learning for Predictive Maintenance

- Anomaly Detection: Implement AI and machine learning algorithms to detect anomalies and predict potential system failures before they occur. This proactive approach can prevent outages and optimize maintenance schedules.

- Automated Responses: AI-driven systems can automatically trigger predefined responses to identified issues, such as rerouting traffic, scaling resources, or alerting IT teams, thereby reducing downtime and mitigating impacts.
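Anomaly detection does not have to start with a trained model. As a deliberately simple statistical stand-in for the machine learning approaches described above, the sketch below flags readings that sit more than three standard deviations from a baseline. The CPU figures are invented sample data and `find_anomalies` is a hypothetical helper.

```python
from statistics import mean, stdev


def find_anomalies(baseline: list[float], recent: list[float],
                   threshold: float = 3.0) -> list[float]:
    """Return recent readings more than `threshold` standard deviations from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline readings")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return [x for x in recent if x != mu]
    return [x for x in recent if abs(x - mu) / sigma > threshold]


baseline_cpu = [41.0, 39.5, 40.2, 42.1, 38.9, 40.7, 41.3, 39.8]
recent_cpu = [40.5, 43.0, 97.4]
print(find_anomalies(baseline_cpu, recent_cpu))  # [97.4]
```

A flagged reading could then feed one of the automated responses mentioned above, such as alerting the on-call team.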

Adopting Zero Trust Security Models

- Enhanced Security Posture: Implementing a Zero Trust security model ensures that no user or device is trusted by default, even if they are within the network perimeter. This minimizes the risk of unauthorized access and potential exploits during outages.

- Continuous Verification: Continuous authentication and authorization processes verify each access request, enhancing security and reducing the likelihood of successful attacks during periods of system vulnerability.

Diversifying IT Vendors and Solutions

- Reducing Single Points of Failure: Relying on a diverse set of IT vendors and solutions can reduce the risk associated with vendor-specific outages. Organizations can implement multi-vendor strategies to ensure redundancy and resilience.

- Cross-Platform Compatibility: Ensuring that systems and applications are compatible with multiple platforms can facilitate easier transitions and minimize disruptions during vendor-specific issues.

Enhancing Collaboration with Managed Service Providers (MSPs)

- Expertise and Resources: Collaborating with managed service providers offers access to specialized expertise and additional resources, enhancing an organization’s ability to respond to and recover from outages.

- Scalable Solutions: MSPs provide scalable solutions that can quickly adapt to changing needs and support disaster recovery efforts with their infrastructure and capabilities.

Utilizing Blockchain for Secure Data Management

- Immutable Records: Blockchain technology can help preserve data integrity through tamper-evident, append-only records, which can be particularly useful for maintaining accurate logs and audit trails during and after outages.

- Decentralized Networks: Blockchain's decentralized nature can enhance data availability and security, reducing the risk of data loss or corruption during system failures.
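The core idea behind tamper-evident records can be shown without a full blockchain. The sketch below is a plain hash chain, not a distributed ledger: each log entry stores the hash of the previous one, so editing any earlier entry breaks verification. The entry format and function names are hypothetical, and the log messages are invented.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, message: str) -> str:
    """Hash the message together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "message": message}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list[dict], message: str) -> None:
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "message": message,
        "prev": prev_hash,
        "hash": entry_hash(prev_hash, message),
    })


def verify(log: list[dict]) -> bool:
    """Recompute every hash in order; any edited entry makes this return False."""
    prev_hash = GENESIS_HASH
    for entry in log:
        expected = entry_hash(prev_hash, entry["message"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "sensor update received")
append_entry(audit_log, "host entered boot loop")
append_entry(audit_log, "faulty file removed in safe mode")
print(verify(audit_log))  # True

audit_log[1]["message"] = "host rebooted normally"  # simulate tampering
print(verify(audit_log))  # False
```

A real blockchain adds replication and consensus across many nodes on top of this chaining, which is where the availability benefits described above would come from.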

Developing Autonomous Systems

- Self-Healing Capabilities: Autonomous systems equipped with self-healing capabilities can automatically detect, diagnose, and recover from failures without human intervention, significantly reducing downtime.

- Adaptive Responses: These systems can adapt to changing conditions in real time, optimizing performance and maintaining continuity even in the face of disruptions.
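A very small version of self-healing is a supervisor that checks a service, restarts it when the check fails, and gives up after a bounded number of attempts so that a human is alerted. The sketch below assumes hypothetical `check` and `restart` callables and uses a fake service for demonstration. The restart limit matters: when a restart cannot fix the fault, as with a boot loop, unbounded retries only hide the problem.

```python
from typing import Callable


def supervise(check: Callable[[], bool], restart: Callable[[], None],
              max_restarts: int = 3) -> bool:
    """Return True once `check` passes; return False if restarts are exhausted."""
    for attempt in range(max_restarts + 1):
        if check():
            return True
        if attempt < max_restarts:
            restart()
    return False  # caller should escalate to a human operator


class FakeService:
    """Stand-in for a real service; becomes healthy after two restarts."""

    def __init__(self):
        self.restarts = 0

    def healthy(self) -> bool:
        return self.restarts >= 2

    def restart(self) -> None:
        self.restarts += 1


service = FakeService()
print(supervise(service.healthy, service.restart))  # True
print(service.restarts)                             # 2
```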

By exploring and implementing these alternative approaches, organizations can enhance their resilience and preparedness for future outages. Diversifying strategies and leveraging emerging technologies can provide robust solutions to minimize the impact of unexpected disruptions and ensure the continuity of critical operations.

Conclusion

The recent outage caused by a faulty channel file update for CrowdStrike's Falcon sensor underscores the critical importance of thorough update testing and robust IT infrastructure. The incident, which led to widespread BSOD errors on Windows systems due to an out-of-bounds memory read, serves as a stark reminder for both cybersecurity providers and the industries that rely on their services. Providers must ensure rigorous pre-deployment testing and swift issue resolution, while industries must maintain diversified and resilient IT strategies to mitigate the risks of depending on single-source solutions. This event highlights the necessity for continuous monitoring, proactive defense mechanisms, and comprehensive contingency planning to safeguard against similar disruptions in the future.
